SDXL 1.0 is an open image-generation model that has launched on several platforms, following an earlier research-only release.

In an announcement yesterday, Stability AI (the company behind Stable Diffusion) released SDXL 1.0, an open model it dubbed the “next iteration in the evolution of text-to-image generation models.” It is the company’s flagship image generation model and, in tests against Stability AI’s other models, showed a marked improvement.

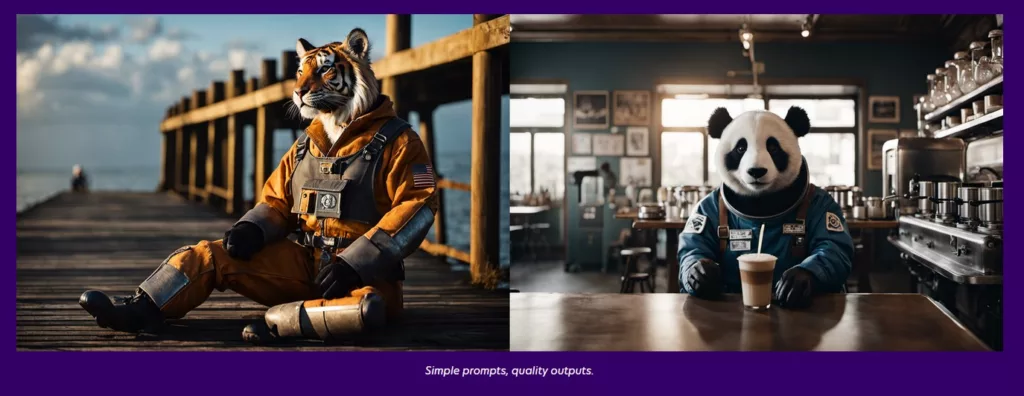

The model can generate high-quality images in virtually any art style and is particularly strong at photorealism. It is also tuned for vibrant and accurate colors, with better contrast, lighting, and shadows than its predecessors.

The model also handles subjects that image generators have traditionally struggled with, such as hands, legible text, and spatially arranged compositions, and it is better at telling similar concepts apart.

SDXL is focused on creating more beautiful and richer artwork from simple prompts. Under the hood, it pairs a 3.5B-parameter base model with a refiner, giving a 6.6B-parameter ensemble pipeline. In comparison, DALL-E 2 reportedly has 3.5B parameters plus 1.5B parameters for enhancing the resolution, and Midjourney is reported to use 5B+ parameters.

Fine-tuning and advanced control have also improved considerably compared with previous Stability AI models such as SD 1.5 and SD 2.1; these new control features will be launched soon.

SDXL 1.0 is available on Amazon SageMaker, Amazon Bedrock, and Clipdrop, and its source code is on GitHub. The model weights are also available on Hugging Face. It is released under the CreativeML Open RAIL++-M license, which grants users a “perpetual, worldwide, non-exclusive, no-charge, royalty-free, irrevocable copyright license … to make, have made, use, offer to sell, sell, import, and otherwise transfer.”
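
For readers who want to try the Hugging Face release, here is a minimal Python sketch of the two-stage base-plus-refiner flow using the `diffusers` library. It is an illustration, not an official recipe. The repository IDs, the `generate_image` helper, the step count, and the 0.8 hand-off point are assumptions that do not come from the announcement. Running it requires `torch`, `diffusers`, a CUDA-capable GPU, and a multi-gigabyte download of the weights.

```python
# Hugging Face repository IDs (assumed here; not quoted from the announcement).
BASE_MODEL_ID = "stabilityai/stable-diffusion-xl-base-1.0"
REFINER_MODEL_ID = "stabilityai/stable-diffusion-xl-refiner-1.0"


def generate_image(prompt, steps=40, handoff=0.8, device="cuda"):
    """Hypothetical helper: denoise with the base model, then finish with the refiner."""
    # Imported lazily so this file can be loaded without the heavy dependencies.
    import torch
    from diffusers import DiffusionPipeline

    base = DiffusionPipeline.from_pretrained(
        BASE_MODEL_ID,
        torch_dtype=torch.float16,
        variant="fp16",
        use_safetensors=True,
    ).to(device)

    # The refiner reuses the base model's second text encoder and VAE to save memory.
    refiner = DiffusionPipeline.from_pretrained(
        REFINER_MODEL_ID,
        text_encoder_2=base.text_encoder_2,
        vae=base.vae,
        torch_dtype=torch.float16,
        variant="fp16",
        use_safetensors=True,
    ).to(device)

    # The base model handles the first `handoff` fraction of the denoising
    # schedule and returns latents instead of a decoded image.
    latents = base(
        prompt=prompt,
        num_inference_steps=steps,
        denoising_end=handoff,
        output_type="latent",
    ).images

    # The refiner picks up at the same point and completes the remaining steps.
    return refiner(
        prompt=prompt,
        num_inference_steps=steps,
        denoising_start=handoff,
        image=latents,
    ).images[0]
```

A call such as `generate_image("a watercolor painting of a lighthouse at dawn").save("lighthouse.png")` would write the result to disk. Skipping the refiner and using only the base pipeline is a reasonable choice when GPU memory is tight.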