Is a time coming when ChatGPT will be “competing” with Q&A and advice communities and there will be a struggle to retain users?

There are many thriving communities online that could be hurt by ChatGPT’s proliferation. These include subreddits like Explain Like I’m Five and Relationship Advice, Stack Exchange, Quora, and domain-specific portals such as those for math, English, or history. Will ChatGPT end up “competing” with these communities, leaving them struggling to retain users?

Images used in Case Studies are mainly AI-generated, using tools such as Midjourney, DALL·E, Stable Diffusion, and Niji Journey.

More specifically, where will we go for information in relatively well-established fields? For example, a student with a high-school science question, or someone who has suddenly become conscious of their health, will find ChatGPT faster and more polished.

First of all, I hate to put Reddit, Quora, and Stack Exchange in the same basket.

They are vastly different, and each is stronger than the others in certain subjects. The scope of this case study is every online community that gives advice and answers questions in fields that are already well documented and well understood. Many Stack Exchange sites do just that, as do many subreddits and a good portion of Quora. Forums I don’t mention by name belong in this basket too, whether they are about internet marketing, PC gaming, health advice, or options trading. The basket does not include most of the high-quality, detailed answers given by professionals on Stack Exchange, Reddit discussions grounded in experience and understanding, or answers on Quora from engineers with over a decade of experience.

It’s still too early to draw conclusions, but the issue is getting more and more attention. Let’s look at a small example.

r/Relationship_Advice

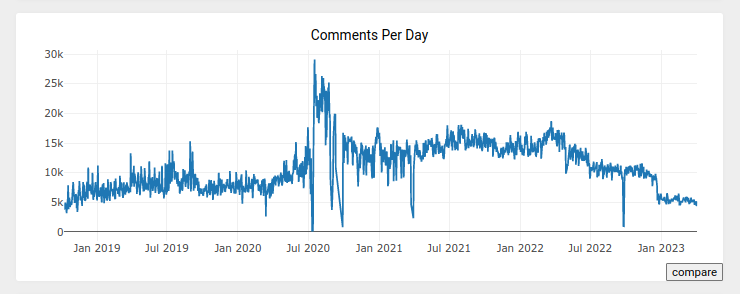

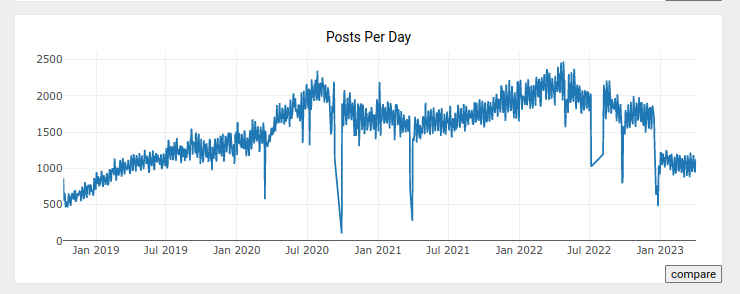

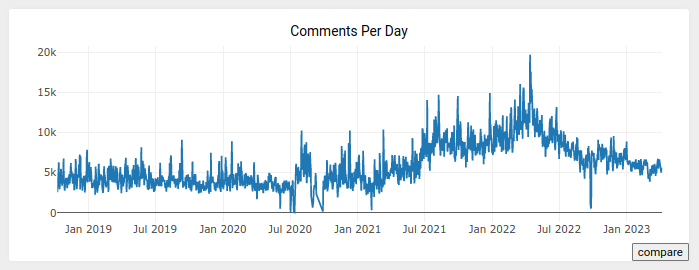

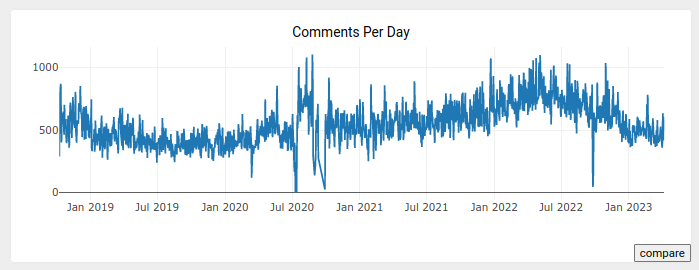

The subreddit r/relationship_advice has seen a notable dip in comments and posts per day. People use it to ask questions about relationships, friends, family, and human emotions in general.

Reddit might not be the best place for such advice to begin with, but the sudden dip in its stats tells a story yet to unfold.

Comments-per-day and posts-per-day stats taken from here. Notice the dip at the very end, at the “January 2023” label.

ChatGPT was released on November 30, 2022, but it arguably took us a while to grasp its capabilities. The major shift came about two months later, when its popularity exploded in January 2023.

Mere coincidence?

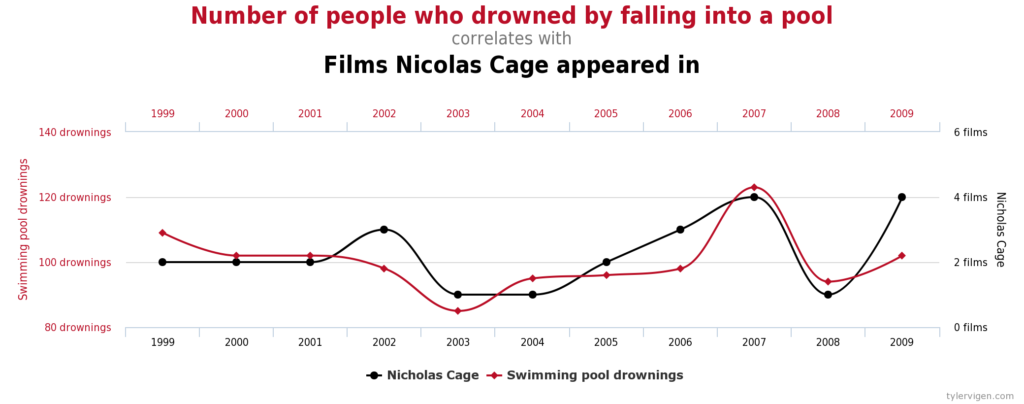

Correlation doesn’t imply causation. It’s a fool’s errand to look at a chart that lines up with an event and declare the event its cause. These charts alone are not enough; otherwise, Nicolas Cage’s film appearances must be driving swimming-pool drownings by some quantum magic.
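To see why a matching chart proves so little, here is a minimal sketch that computes the Pearson correlation coefficient by hand for two series that merely trend downward over the same months. The numbers are invented for illustration only; they are not the subreddit data, and the second series is a hypothetical metric with no causal link to the first.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    # Covariance numerator and the two standard-deviation terms
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# Hypothetical monthly figures, made up for this example
posts_per_day = [10, 9, 8, 7, 6]
unrelated_metric = [50, 44, 41, 35, 30]

print(round(pearson(posts_per_day, unrelated_metric), 3))
```

Both series fall over time, so the coefficient comes out close to 1 even though neither one influences the other. A shared trend is enough to produce a “perfect-looking” match.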

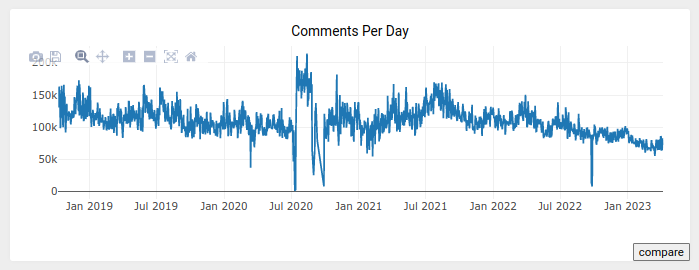

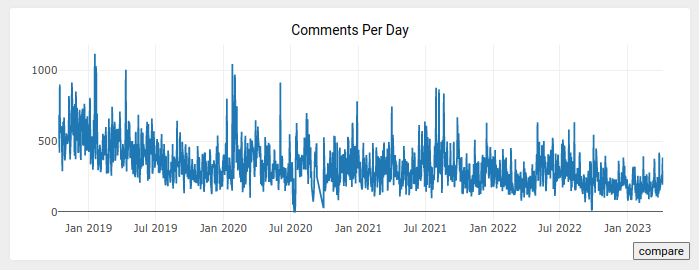

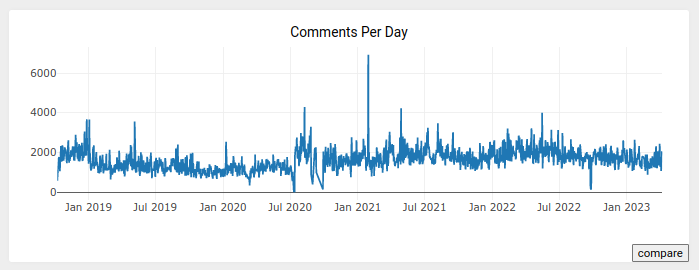

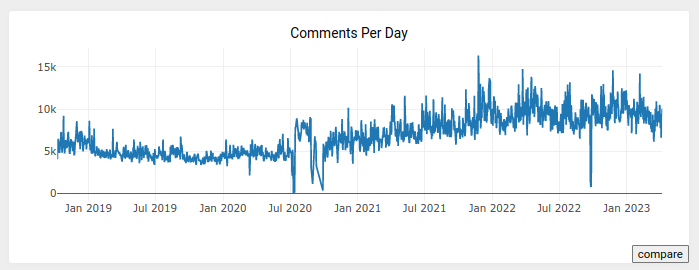

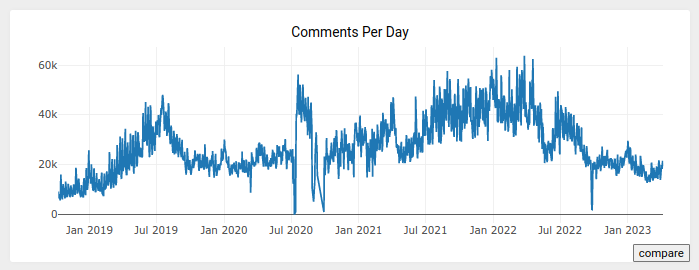

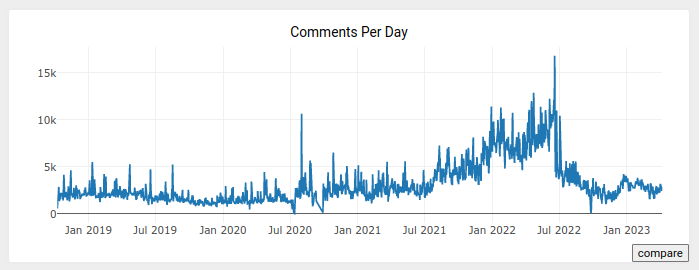

Here are the stats for a few other subreddits that are relevant to our case study. Note that the dips aren’t as pronounced even in the few cases where they do exist.

For now, it is safe to assume that this is either a coincidence or a sign that ChatGPT has lately become too good at giving relationship advice.

ChatGPT’s responses are heavily influenced by what you want it to say, which is not a good sign. If you want it to argue in favor of allowing ChatGPT-generated answers on Stack Overflow, it will make that case. If you want it to argue against, it will do just that. That’s not how experts work.

But my main focus is not today’s ChatGPT. It’s tomorrow’s.

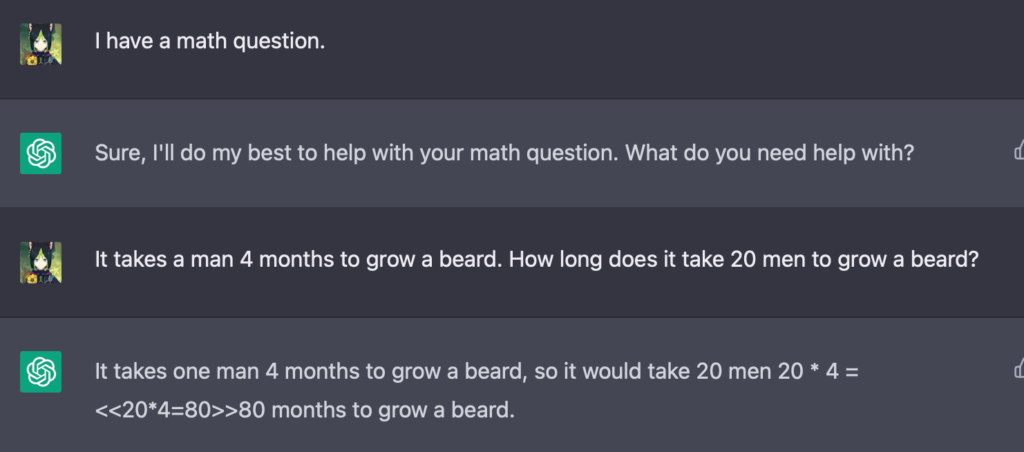

In an answer that explains why ChatGPT cannot replace people answering questions on Quora, a user shows this image as an analogy.

Sure, ChatGPT looks dumb here: only after being told that it’s a math problem does it switch to solving it the math way. But it’s been a few months since that answer was posted, and it’s not dumb anymore.

Within three months, ChatGPT had made the user’s criticism partly outdated. As ChatGPT is fine-tuned further, many of its current shortcomings, and with them the arguments for why it will not replace other platforms, will also be “fixed.” And that’s where trouble could start.

A recent study catalogs many ways in which ChatGPT fails, organized into categories: reasoning, logic, math and arithmetic, factual accuracy, bias and discrimination, wit and humor, coding, spelling and grammar, self-awareness, ethics and morality, and other kinds of errors.[1]

What’s surprising is that many of these failures have already been fixed by GPT-4 and regular updates.

Is it a legitimate threat?

ChatGPT does not understand what people write the way another person does. It is trained on text that has already been written and does its best to stitch words together, predicting what should come next in a sentence based on that historical data. Consequently, it cannot truly understand your feelings or draw on personal experience to guide you.
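The “predict what comes next” idea can be illustrated with a toy bigram model: count which word follows which in some training text, then guess the most frequent follower. This is a minimal sketch for intuition only. ChatGPT uses a large neural network over tokens, not word-pair counts, and the corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str):
    """Return the word seen most often after `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Tiny hypothetical "historical data"
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))
```

The model answers “cat” after “the” only because that pairing was most common in its data. It has no notion of what a cat is, which is the gap between statistical prediction and understanding described above.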

But consider other topics: advice on diets or minor medical issues, problems with your pets, the history of a place, a scientific theory, and so on.

Explaining these rarely requires human feelings anyway.

Let’s go over a few examples:

- You provide a description of your lifestyle and eating habits, and ask GPT-4 to prepare a dietary plan for you. On average, this is going to be better and more accurate than a local nutritionist or dietitian. The same applies to minor health issues, pet issues, or travel questions.

- You are planning to visit a well-known tourist spot, town, or country and want a summary of what to expect. If you simply ask around or online, you will get widely varying opinions, because most of the people answering will be tourists themselves, and sifting through it all quickly becomes tiring. In this case, ChatGPT can provide remarkable value by giving you pros and cons, comparisons, and even what to expect on your journey.

- From science-related Stack Exchange websites to subreddits, a lot of people look for simple answers to big questions or an explanation of complicated theories and concepts. A professor might be able to explain better in a one-on-one interaction, but ChatGPT can easily compete with, let’s say, the Explain Like I’m Five subreddit.

Current limitations (especially of the free ChatGPT preview) are also holding back its true potential.

OpenAI is developing ChatGPT at a fast pace. It can write working code and troubleshoot technical issues. As its accuracy improves, it’s easy to believe that within the next one to two years we will rely far less on human-based communities (and even less on social interactions) to get our information.

The community & trust

Most importantly, ChatGPT can never mimic the sense of community and belonging you get from other people. It can never replace the higher level of trust we place in a specialist with legitimate credentials. ChatGPT is simply a different type of tool than online communities.

But if community and trust are our only weapons against it, we might be opening a whole other can of worms: online platforms splitting into those built purely on community and trust, leading to the death of the platforms that are a mixed bag.

For example, if ChatGPT grows to seriously threaten activity on Q&A platforms, then as places like r/AskScience and Quora become less and less active, their community-building role will be captured instead by social networking sites and platforms like Discord, which are more convenient and better targeted for that purpose.

A clear distinction between platforms that offer community, trust, viewpoints, and experiences (like Discord or social networking sites) and more advanced AI chatbots might leave mixed platforms (like Quora and Reddit) with a conundrum.

A second way out?

If the fear that future versions of ChatGPT or its competitors will eat into the readership and activity of Q&A and discussion communities turns out to be justified, there will be a second option besides simply fighting back.

And that will be integration.

ChatGPT can easily be integrated into almost any website through OpenAI’s API. When a platform that relies on users asking and answering questions, or holding long informative discussions, finds that ChatGPT is eating into its user base, an easy way out will be to incorporate ChatGPT (or another AI chatbot) into the platform itself to retain the traffic.

This lets the platform keep serving ads while the user stays in familiar territory.
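As a rough idea of what such an integration involves on the platform’s side, here is a minimal sketch that builds, but does not send, an HTTP request asking a chatbot to answer a question a user has just posted. It assumes OpenAI’s Chat Completions endpoint and its `model`/`messages` JSON body; the model name, the system prompt, and the helper name `build_answer_request` are illustrative assumptions, not any platform’s actual implementation.

```python
import json
import urllib.request

# Assumption: OpenAI's Chat Completions HTTP endpoint
API_URL = "https://api.openai.com/v1/chat/completions"


def build_answer_request(question: str, api_key: str,
                         model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (without sending) a POST request asking the model to answer `question`."""
    payload = {
        "model": model,  # illustrative model name
        "messages": [
            # Hypothetical system prompt a Q&A site might use
            {"role": "system",
             "content": "You answer questions posted on a Q&A community. "
                        "Be concise and say when you are unsure."},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


req = build_answer_request("Why is the sky blue?", api_key="sk-...")
print(req.get_method(), req.full_url)
```

Actually sending it would take `urllib.request.urlopen(req)` and a real API key. The point is how little glue code sits between a question box on a forum and an AI-generated answer.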

Quora’s Poe

Quora launched Poe a little over a month ago. It’s an AI chatbot app built on top of other AI chatbots, including ChatGPT and Anthropic’s Claude. Quora plans to scale it up, letting users choose among the top AI chatbots in the world.

Though this is not integration per se, it is a step toward adapting to ChatGPT’s success rather than fighting it.

In fact, Poe is basically waiting to get good enough: content created on it will be brought to the main Quora platform once it meets a high enough quality bar. To quote Quora’s CEO:

… we will evolve Quora itself as AI enables better experiences, and we will distribute content created on Poe on Quora when it meets a high enough quality standard.

Adam D’Angelo, Quora

Reddit’s comment on AI

In a discussion with The Verge, Reddit spokesperson Nick Singer said the following:

AI chatbot technologies are still new and something we’re exploring and keeping our eyes on. Though, there will always be a need for genuine community and human connection, which can be aided by tools like this. We see chatbots being used in fun and innovative ways to complement community and human connection — not replace it.

Nick Singer, Reddit

The report closes by noting that Reddit’s statement also said the company is exploring AI chatbot technologies.

Stack Overflow’s ban of ChatGPT

Stack Overflow is the main Stack Exchange community, focused on programming. The core concern is the lack of verification. Let’s face it: if ChatGPT generates plausible but low-quality code that nobody tests (or the person posting it has no clue about the topic themselves), it’s not only a waste of time but actively harmful to the community.

Posting content from any generative AI, including GitHub Copilot and ChatGPT, without validating it is temporarily banned on Stack Overflow. According to the policy, “the average rate of getting correct answers from ChatGPT is too low,” so “the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking and looking for correct answers.”

Note that the wider Stack Exchange network has no equally strict, network-wide rule forbidding AI usage.

Research into ChatGPT’s answering capabilities

Academia has studied ChatGPT meticulously. Here are some extracts relevant to the problem at hand:

- “Our comprehensive evaluation included 450 questions against different domains and KGs. We evaluated the correctness by manually comparing the generated answers to the ground truth. Through this process, we recognized that ChatGPT has a perfect understanding of questions, even for those it fails to answer.”[2] The study further notes that ChatGPT’s correctness is lower than that of KGQAN, a traditional question-answering system for knowledge graphs (KGs), but that ChatGPT shows substantial robustness, determinism, explainability, and understanding.

- “ChatGPT exhibits a stability of approximately 79.0% in complex question answering tasks.”[3]

- “It is worth acknowledging that its capacity to emulate human-like responses is nothing short of extraordinary. Broadly speaking, the ChatGPT response commonly aligned with key research themes in the literature. Despite this, a major criticism of its current design is the absence of evidence to support its output. As it currently stands, ChatGPT runs the risk of positioning itself as the ultimate epistemic authority, where a single truth is assumed, without a proper grounding in evidence or presented with sufficient qualifications.”[4]

- “ChatGPT generated largely accurate information to diverse medical queries as judged by academic physician specialists although with important limitations. Further research and model development are needed to correct inaccuracies and for validation.”[5]

So, where do we stand? Is it AI vs. UGC yet?

I guess it mainly depends on the intent behind the discussion or question. Users looking for a story, emotions, or guided troubleshooting will stick to current communities such as a subreddit, Quora, or Stack Exchange. But those looking purely for answers and polished, well-rounded information will gravitate toward ChatGPT over time.

Assuming OpenAI makes considerable progress against inaccuracies and hallucinations, ChatGPT’s responses will quickly become more reliable. Competition from other players in Silicon Valley and beyond helps accelerate research into solving these problems.

UGC (user-generated content) communities that depend mainly on real people writing responses, helping others, and sharing stories are not threatened right now. Their readership has not taken a major hit. But people are increasingly taking their questions to ChatGPT, and that trend should not be ignored.

An app cannot reach 100 million active users in a couple of months unless it adds substantial value to its users’ queries.

Right now, anyone looking for an answer from credible sources, experts, or specialists will still go to UGC channels, for example when asking for serious travel tips or trying to fix nuanced programming errors. But for generic questions, or when one is short on time, ChatGPT is increasingly becoming the go-to option. This means that people who Google a question and append “reddit” or “quora” to get human-sourced answers might start exploring AI as an effective alternative.

References

1. Ali Borji. “A Categorical Archive of ChatGPT Failures.” arXiv (Computation and Language), 6 Feb 2023, pp. 2-31.

2. Reham Omar, Omij Mangukiya, Panos Kalnis, Essam Mansour. “ChatGPT versus Traditional Question Answering for Knowledge Graphs: Current Status and Future Directions Towards Knowledge Graph Chatbots.” arXiv (Computation and Language), 8 Feb 2023, pp. 5-6.

3. Yiming Tan, Dehai Min, Yu Li, Wenbo Li, Nan Hu, Yongrui Chen, Guilin Qi. “Evaluation of ChatGPT as a Question Answering System for Answering Complex Questions.” arXiv (Computation and Language), 14 Mar 2023, p. 15.

4. Grant Cooper. “Examining Science Education in ChatGPT: An Exploratory Study of Generative Artificial Intelligence.” Journal of Science Education and Technology, 22 Mar 2023, p. 6.

5. Douglas Johnson, Rachel Goodman, J Patrinely, Cosby Stone, et al. “Assessing the Accuracy and Reliability of AI-Generated Medical Responses: An Evaluation of the Chat-GPT Model.” Nature Portfolio, 28 Feb 2023, p. 2.