In a major mishap, Microsoft employees accidentally exposed private data alongside AI training data shared on GitHub, through a link that granted full control over the underlying storage.

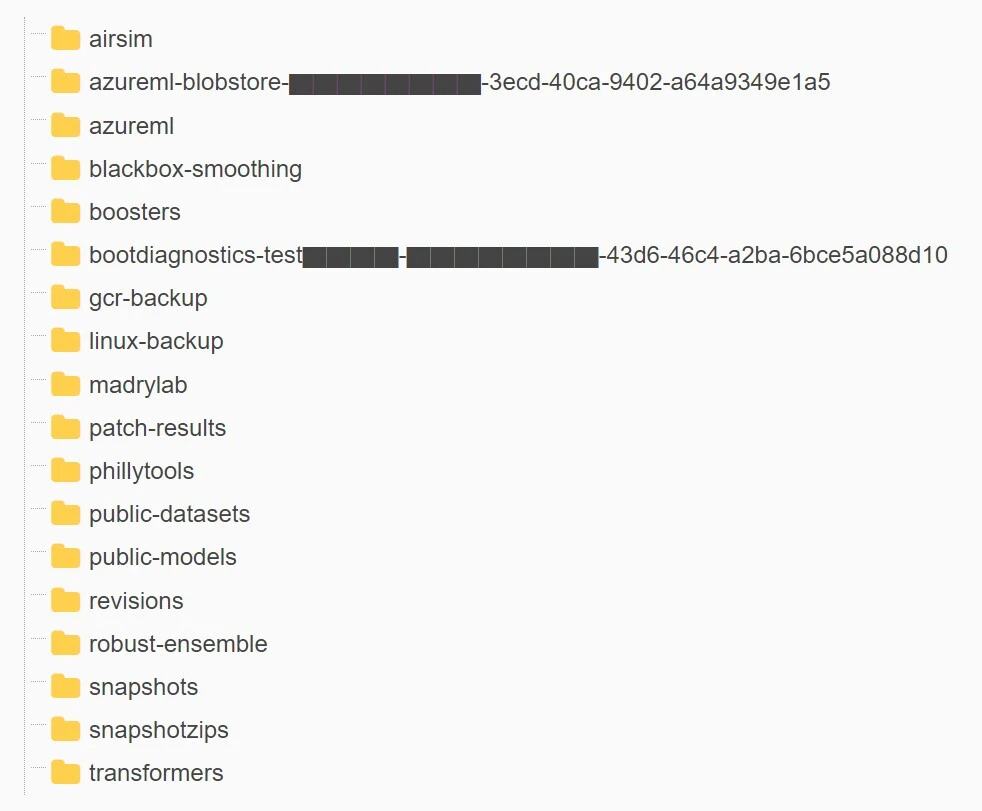

Microsoft’s AI research team was publishing routine open-source training data for image recognition on the company’s GitHub account. In the process, they accidentally exposed 38TB of private data, including full disk backups of a couple of employees’ computers. The backups contained, among other things, passwords, private keys, and more than 30,000 internal Microsoft Teams messages.

The accident happened because the team shared the data using Azure’s SAS (Shared Access Signature) tokens. Teams and team leaders typically choose which files to share, but without proper safety checks, a token can just as easily expose an entire storage account that was never meant to be public.

Wiz is a cloud security firm whose research team tracks the accidental exposure of cloud-hosted data. The team disclosed the spillage in a blog post published on September 18.

Noting the growth of AI in recent months, the authors described the incident as an example of the new risks companies face when working with massive amounts of data for AI training. The original intent was indeed to share AI training data, but the mishap itself had nothing to do with AI work, beyond the fact that the AI research team was behind it.

According to the post, the shared URL “allowed access to more than just open-source models. It was configured to grant permissions on the entire storage account, exposing additional private data by mistake.”

The token granted full-control permissions rather than read-only access, so the exposure came down entirely to misconfigured permissions on the link shared on GitHub.
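To make the misconfiguration concrete, here is a minimal Python sketch of the kind of check that could flag an over-permissive SAS link before it is published. It relies only on the standard query fields of a SAS URL (`sp` for permissions, `srt` for account-level resource types, `se` for expiry). The helper `audit_sas_url`, the 7-day threshold, and the example URLs are assumptions made for illustration, not anything Microsoft or Wiz used.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional
from urllib.parse import parse_qs, urlparse

# Permissions treated as safe for public sharing: read (r) and list (l).
SAFE_PERMISSIONS = {"r", "l"}


def audit_sas_url(url: str, now: Optional[datetime] = None, max_days: int = 7) -> List[str]:
    """Return warnings for a SAS URL (hypothetical helper, illustrative only)."""
    now = now or datetime.now(timezone.utc)
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    findings = []

    # `sp` lists one letter per granted permission, e.g. "rwdl".
    extra = sorted(set(params.get("sp", "")) - SAFE_PERMISSIONS)
    if extra:
        findings.append("permissions beyond read/list: " + "".join(extra))

    # `srt` only appears on account SAS tokens, which are not tied to one file.
    if "srt" in params:
        findings.append("account SAS: can span the storage account, not a single file")

    # `se` is the expiry time in ISO 8601 (UTC).
    expiry_text = params.get("se")
    if expiry_text:
        expiry = datetime.fromisoformat(expiry_text.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > timedelta(days=max_days):
            findings.append(f"expiry more than {max_days} days away: {expiry_text}")
    return findings


if __name__ == "__main__":
    # Made-up URL with a placeholder signature; not a real token.
    risky = ("https://exampleaccount.blob.core.windows.net/"
             "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlac&se=2051-10-06T00:00:00Z&sig=REDACTED")
    for warning in audit_sas_url(risky):
        print(warning)
```

A link scoped to a single file, with read-only permission and a short expiry, would pass this check with no warnings. A check like this only inspects the URL, so it cannot tell what the storage account actually contains.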