Microsoft has finally unveiled its custom AI accelerator and a processor for LLM and AI inferencing workloads.

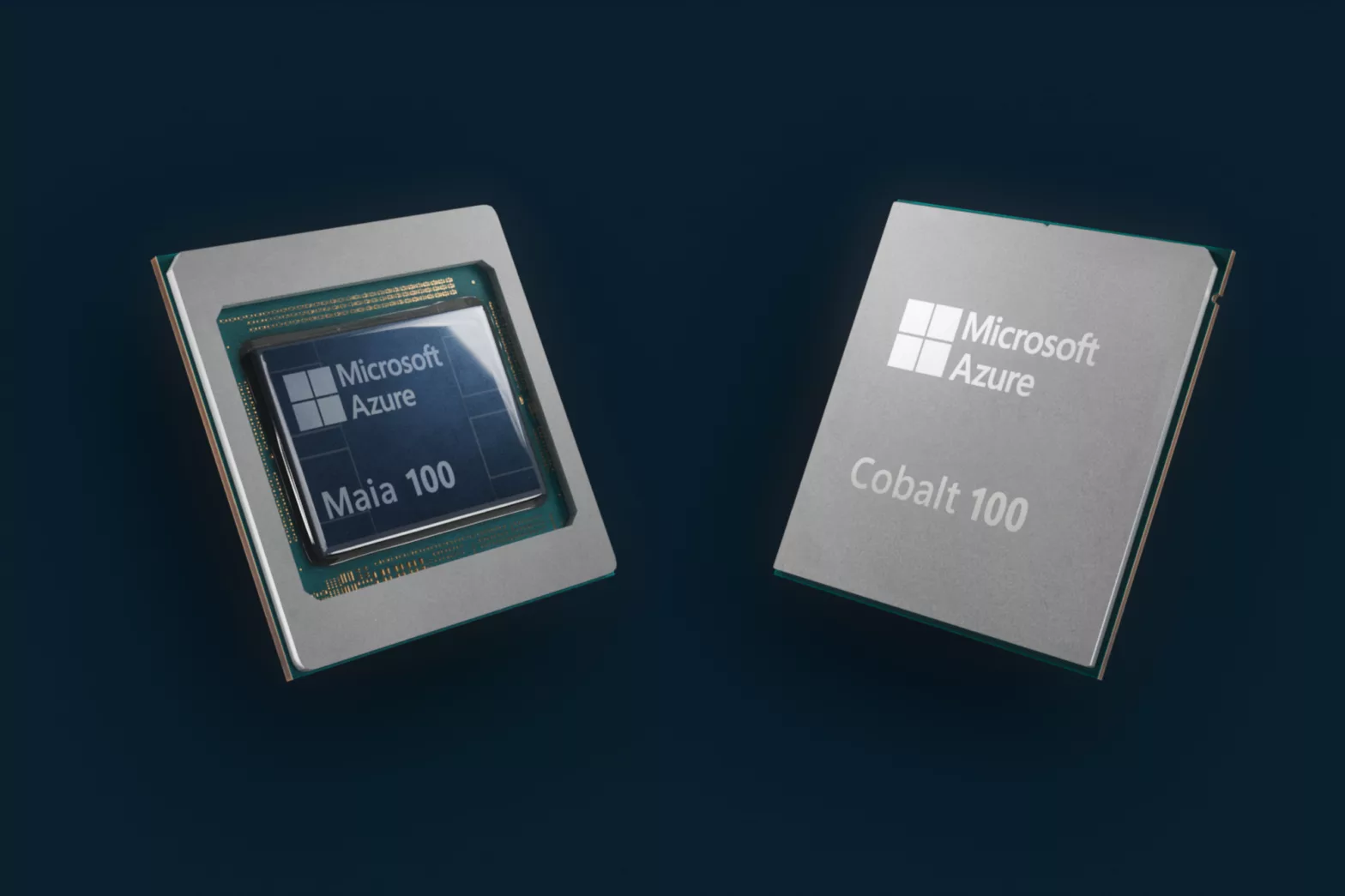

In a bid to rely less on Nvidia and build its own chips for AI inferencing and other workloads, Microsoft announced two custom silicon designs at Microsoft Ignite. The first is the Azure Maia AI Accelerator, built on TSMC's 5nm process. The second is the ARM-based Azure Cobalt processor with 128 cores.

According to the official announcement, these chips will begin rolling out to Microsoft data centers early next year. They will power the Azure OpenAI Service and Windows' own Copilot app.

The Maia 100 chip will compete directly with Nvidia's H100 GPUs, while the Cobalt 100 chip will compete with Intel's server processors.

Maia can also be used to train LLMs. Currently, all training for Microsoft's AI tools and for OpenAI's ChatGPT runs on (expensive) Nvidia GPUs. Microsoft is betting heavily on generative AI, especially for enterprise customers on the Azure platform, and both chips are aimed specifically at that segment.

Maia 100 is currently being tested on GPT-3.5 Turbo, the same model that powers ChatGPT, Bing AI workloads, and GitHub Copilot. Microsoft is in the early phases of deployment. As with Cobalt, the company isn't yet willing to release exact Maia specifications or performance benchmarks.

From Verge’s interview with Rani Borkar, Azure hardware systems and infrastructure, Microsoft

Companies including Microsoft, OpenAI, Meta, and Google rely on Nvidia GPUs, as does virtually every other company or startup offering a custom AI solution. They need these GPUs both to train their language models and to run inference for the AI tools built on those models. This demand has already driven Nvidia's market value sharply higher. Companies are increasingly looking for cheaper alternatives, and designing custom AI silicon in-house can be a very cost-efficient decision.