Researchers at Osaka University use Stable Diffusion to turn MRI scans into images, and the resemblance is uncanny.

Yu Takagi of Osaka University, Japan, told Al Jazeera how, after training, their Stable Diffusion-based method could form images from MRI scans that were uncannily accurate.

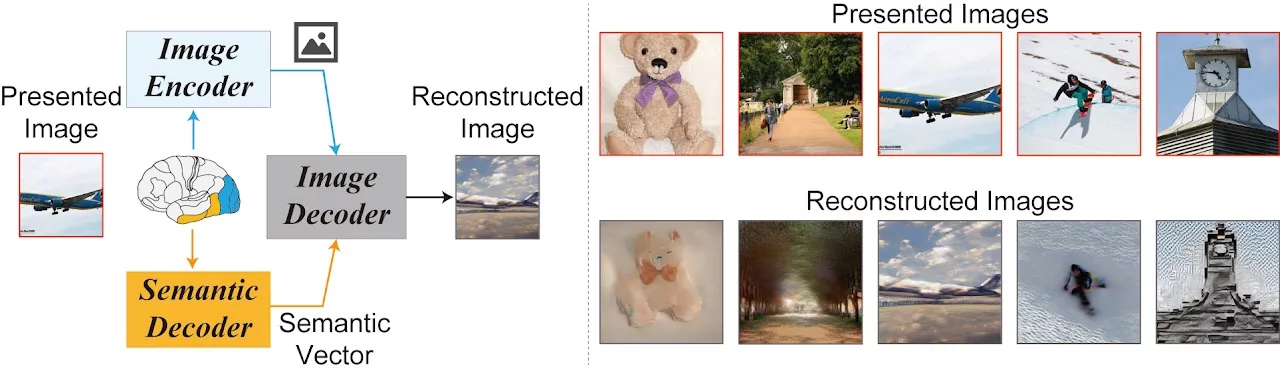

Subjects were shown certain images while lying inside an MRI machine for hours. Takagi and his colleague Shinji Nishimoto built a model that lets Stable Diffusion take brain activity recorded in the MRI scans and turn that data into images. When Stable Diffusion was given the scan data, it generated high-quality images that were surprisingly accurate and "bore an uncanny resemblance to the originals."

Stable Diffusion was never shown the original pictures. The resulting images were not available anywhere else or part of any training data either, since they were generated by Takagi and Nishimoto during the research.
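The article does not spell out how brain activity is mapped into something Stable Diffusion can use. One common approach in this kind of work is a per-subject linear (ridge) regression from fMRI voxel responses to the latent vectors an image generator is conditioned on. The Python sketch below illustrates only that decoding step, on synthetic data, and is an assumption rather than the researchers' code: the array sizes, the noise level, and the helpers `fit_ridge` and `mean_trial_correlation` are hypothetical, and the final step of feeding predicted latents into Stable Diffusion is left out.

```python
import numpy as np

def fit_ridge(X: np.ndarray, Z: np.ndarray, alpha: float = 1.0) -> np.ndarray:
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Z."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ Z)

def mean_trial_correlation(pred: np.ndarray, target: np.ndarray) -> float:
    """Average Pearson correlation between predicted and true latents, per trial."""
    p = pred - pred.mean(axis=1, keepdims=True)
    t = target - target.mean(axis=1, keepdims=True)
    r = np.sum(p * t, axis=1) / (np.linalg.norm(p, axis=1) * np.linalg.norm(t, axis=1))
    return float(r.mean())

rng = np.random.default_rng(0)
n_train, n_test = 800, 50        # viewing trials (synthetic)
n_voxels, latent_dim = 200, 16   # hypothetical sizes, far smaller than real scans

# Synthetic "ground truth": each viewed image has a latent vector, and each
# voxel responds to it linearly plus measurement noise.
mixing = rng.normal(size=(latent_dim, n_voxels))
Z_train = rng.normal(size=(n_train, latent_dim))
Z_test = rng.normal(size=(n_test, latent_dim))
X_train = Z_train @ mixing + 2.0 * rng.normal(size=(n_train, n_voxels))
X_test = Z_test @ mixing + 2.0 * rng.normal(size=(n_test, n_voxels))

# Fit a decoder for this one (synthetic) subject, then apply it to held-out
# trials whose images were never used during fitting.
W = fit_ridge(X_train, Z_train, alpha=10.0)
Z_pred = X_test @ W

score = mean_trial_correlation(Z_pred, Z_test)
print(f"mean held-out correlation: {score:.3f}")
# In a full pipeline, Z_pred would then condition an image generator.
```

Because a decoder like this is fit separately for each person, a new subject would need their own long scanning sessions, which is one reason such a process is slow and costly.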

Takagi remarked, “We really didn’t expect this kind of result.”

This is not mind-reading, however. Given its training, Stable Diffusion simply did its job of translating data into images. Takagi himself notes that there are many misunderstandings about their research.

“We can’t decode imaginations or dreams; we think this is too optimistic. But, of course, there is potential in the future.”

The study's abstract describes it as a "promising method for reconstructing images from human brain activity." An illustrated guide is available on Takagi and Nishimoto's Google Site for the study.

There are well-founded fears that such a system could eventually grow into something that takes raw brain activity and turns it into images. A more advanced, borderline sci-fi scenario would be a device that attaches to your head and extracts your thoughts or memories, which could then be misused or used on people by force.

That, however, is nowhere near the current reality.

The study, which will be presented soon at the Conference on Computer Vision and Pattern Recognition, required subjects to spend up to 40 hours in an MRI machine, and a separate model had to be built for each subject. The whole process was very time-consuming and expensive. The approach is also constrained by the limits of fMRI technology, which introduces significant electrical noise into the recorded data. Finally, the algorithm struggled to decode objects and often produced abstract figures instead.

Takagi and Nishimoto are now working on a better image reconstruction technique.

Additional coverage: AI re-creates what people see by reading their brain scans, Science.org