MTIA is an ASIC that Meta built to accelerate its own AI workloads, and it can beat traditional GPUs in efficiency on some of them.

GPUs are at the heart of AI training. Selling more of them than anyone else recently made Nvidia a $1 trillion company (temporarily), putting it in a club otherwise reserved for Alphabet, Amazon, Apple, and Microsoft. Unsurprisingly, one ex-member of this coveted club is Meta (parent company of Facebook). Meta is one of the leading AI players, and its 65-billion-parameter LLaMA language model has been released openly to researchers, giving tough competition to OpenAI’s GPT and Google’s PaLM models, which power ChatGPT and Bard, respectively. In fact, Meta’s influence is likely the reason why OpenAI reportedly decided to launch its own open-source language model (not a competitor to GPT-4).

To handle such workloads, companies rely on GPUs in data centers, often from Nvidia. But even the GPUs most popular for AI training are not built for AI specifically; they are general-purpose parallel processors that can handle many other workloads. So, what if you made a chip specifically for AI? This is what Meta did.

More precisely, MTIA is an application-specific integrated circuit, or ASIC. Think of Bitcoin mining chips, which can’t do any other task but can mine BTC far faster than traditional GPUs.

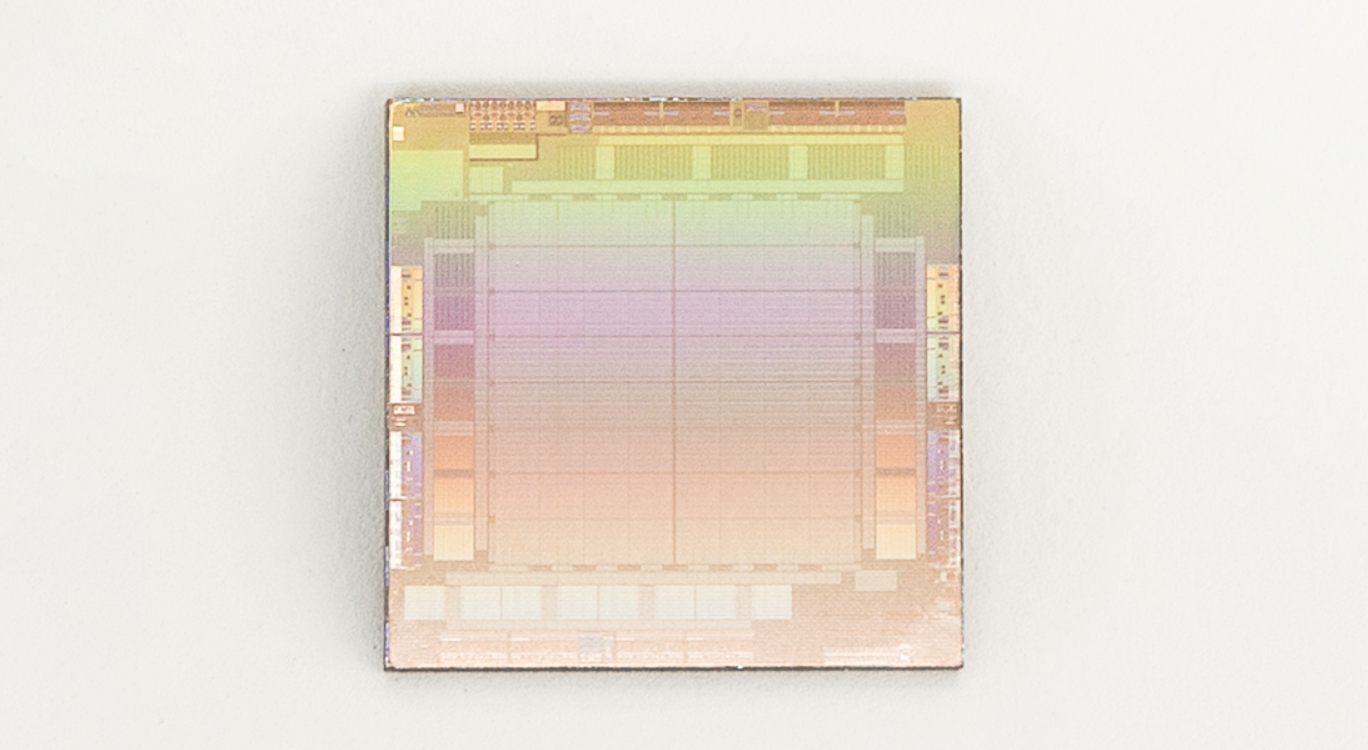

The first generation of the Meta Training and Inference Accelerator, or MTIA, is built to run Meta’s AI workloads specifically. According to Meta, it is more efficient at the inference and related AI tasks the company needs in order to operate services such as content understanding, Feeds, generative AI, and ads ranking.

Official page: MTIA v1: Meta’s first-generation AI inference accelerator

MTIA prioritizes developer-friendliness and integrates with PyTorch 2.0. It’s a full-stack solution designed fully in-house rather than by a chip vendor. Much like many modern GPUs, it is fabricated on the TSMC 7nm process node. It runs at an 800 MHz clock speed and delivers 51.2 teraflops at FP16, with a TDP of 25W.
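To put those numbers in perspective, here is a minimal sketch of the arithmetic behind the efficiency claim. It uses only the three figures quoted above (51.2 teraflops at FP16, 25W TDP, 800 MHz); the function names are made up for illustration, and treating TDP as the actual power draw is an assumption, so the result is a rough peak figure rather than a measured one.

```python
# Back-of-the-envelope efficiency figures from the quoted MTIA v1 specs.
# Assumption: TDP is used as a stand-in for real power draw, so these are
# peak-level estimates, not measurements.

PEAK_FP16_TFLOPS = 51.2   # quoted peak FP16 throughput
TDP_WATTS = 25.0          # quoted thermal design power
CLOCK_HZ = 800e6          # quoted clock speed (800 MHz)


def tflops_per_watt(peak_tflops: float, tdp_watts: float) -> float:
    """Peak throughput divided by TDP, in TFLOPS per watt."""
    return peak_tflops / tdp_watts


def flops_per_cycle(peak_tflops: float, clock_hz: float) -> float:
    """Floating-point operations the whole chip must retire per clock tick."""
    return peak_tflops * 1e12 / clock_hz


if __name__ == "__main__":
    print(f"{tflops_per_watt(PEAK_FP16_TFLOPS, TDP_WATTS):.3f} TFLOPS/W")
    print(f"{flops_per_cycle(PEAK_FP16_TFLOPS, CLOCK_HZ):,.0f} FLOPs per cycle")
```

At a modest 800 MHz, the peak figure only works out if the chip performs tens of thousands of operations per clock tick, which is why the design leans so heavily on parallelism.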

Meta has been developing this chip for a couple of years. The announcement on May 18 was its formal unveiling.

Most notably, MTIA is designed to maximize parallelism and data reuse for efficient workload execution. Meta researchers compared it with an NNPI accelerator and a GPU and found that MTIA is more efficient for low-complexity models. The team also aims to find a balance between computing power, memory capacity, and interconnect bandwidth to optimize performance for Meta’s specific workloads.
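One common way to reason about that balance between compute and bandwidth is the roofline model, sketched below. This is not Meta's methodology, just a standard illustration. The peak value is the 51.2 teraflops quoted earlier; the memory bandwidth is a purely hypothetical placeholder, not an MTIA specification, and the function names are invented for this example.

```python
# Roofline sketch: attainable throughput is capped either by peak compute
# or by how fast data can be fed to the compute units.
# Assumption: HYPOTHETICAL_BANDWIDTH_GB_S is a made-up number for illustration.

PEAK_TFLOPS = 51.2                    # FP16 peak quoted in the article
HYPOTHETICAL_BANDWIDTH_GB_S = 100.0   # placeholder, NOT an MTIA spec


def attainable_tflops(peak_tflops: float, bandwidth_gb_s: float,
                      flops_per_byte: float) -> float:
    """min(peak compute, bandwidth * arithmetic intensity), in TFLOPS."""
    # GB/s * FLOPs/byte = GFLOP/s; divide by 1000 to get TFLOP/s.
    memory_bound_tflops = bandwidth_gb_s * flops_per_byte / 1000.0
    return min(peak_tflops, memory_bound_tflops)


def ridge_point(peak_tflops: float, bandwidth_gb_s: float) -> float:
    """Arithmetic intensity (FLOPs/byte) above which compute is the limit."""
    return peak_tflops * 1000.0 / bandwidth_gb_s


if __name__ == "__main__":
    for intensity in (1, 16, 256, 4096):
        t = attainable_tflops(PEAK_TFLOPS, HYPOTHETICAL_BANDWIDTH_GB_S, intensity)
        print(f"{intensity:>5} FLOPs/byte -> {t:.2f} TFLOPS attainable")
```

Under these assumed numbers, a workload that does little arithmetic per byte fetched is limited by bandwidth long before it reaches peak compute. Reusing data already on the chip raises the effective FLOPs per byte, which is why data reuse matters as much as raw teraflops.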

It’s clear that Meta is putting all of its chips on the table by investing heavily in AI. It remains to be seen just how profitable MTIA will be. An in-house chip built specifically for Meta’s AI needs seems like a natural development in the AI boom. It cuts costs and gives the company full control over development while helping shield it from problems in the supply chain.

This is very likely the next step for all major AI companies. For example, there are already rumors of Microsoft funding the chipmaker AMD (Nvidia’s competitor) to develop its own custom AI chips. Intel is another chipmaker that companies could partner with.

For low-complexity workloads, a fully in-house designed chip that uses a fraction of a GPU’s TDP can be remarkably more cost-effective, and not being locked into Nvidia-based data centers can be a boon when done right. But those data centers offer convenience and scalability, not to mention that there’s no need to spend millions on R&D and manufacturing. Designing silicon chips is an expensive affair, after all.