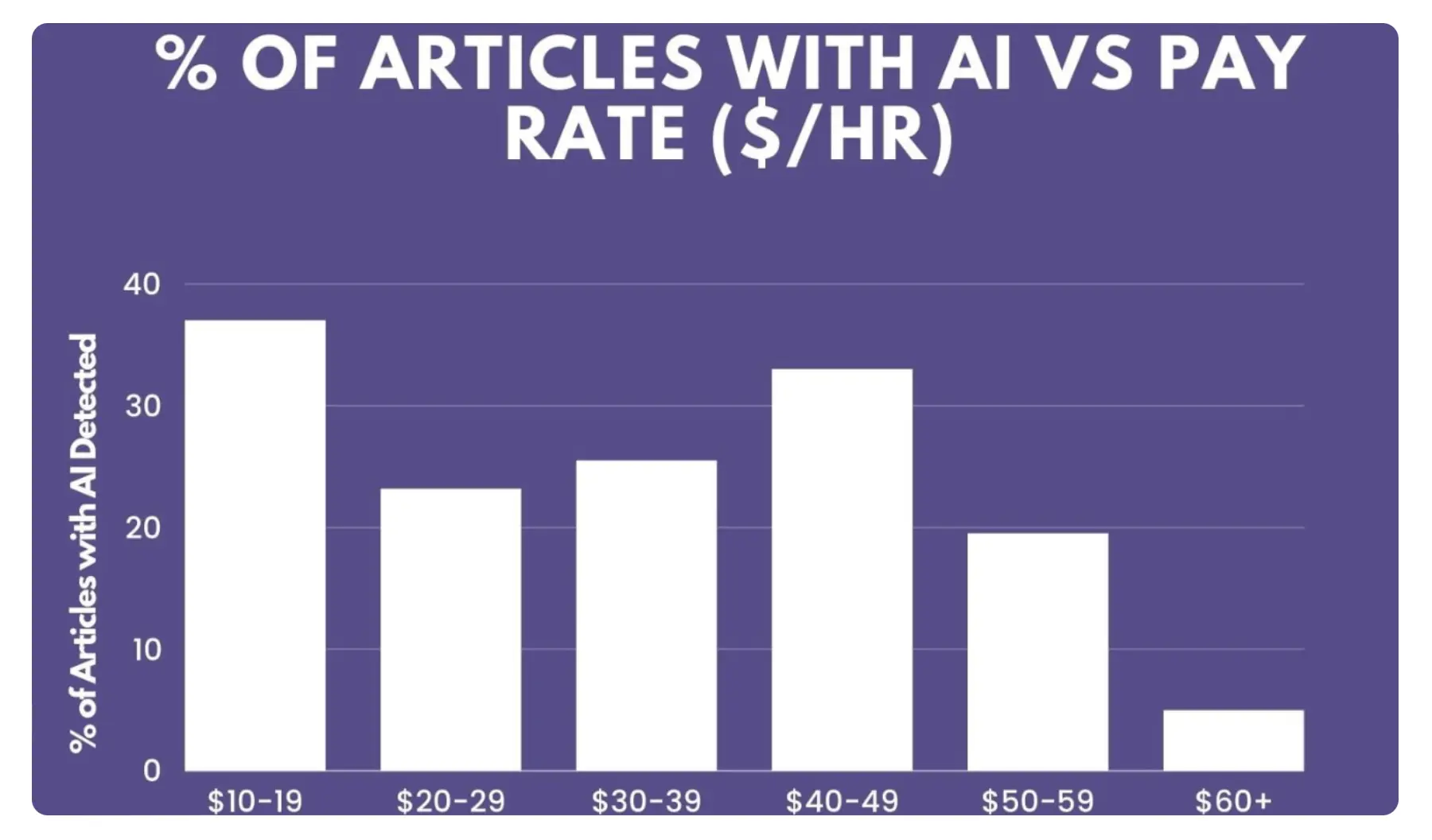

A new report by the maker of an AI content detector finds that as many as 37% of Upwork freelancers in the $10-19 per hour bracket could be using AI tools.

Originality AI is an AI content detector used by people and organizations concerned that their writers are using tools like ChatGPT or Gemini to write their content. In SEO, results with AI content have been mixed: some say AI-generated content gets devalued instantly, while others claim their pages keep ranking even with AI-written text. Google has no hard rule against using AI to generate text for your articles, but the text must not be low-quality or useless. As long as the content clears that bar, it can rank, as shown by the many webmasters who have fine-tuned AI-based content creation workflows for their well-ranking websites.

The study by Originality confirms what we all know: writers are increasingly using AI tools to help with their writing. Some are creating entire articles, others are using these tools to reduce their research time, and yet others are using them to generate a chunk of text before they edit it.

The highest share of likely AI use was in the lowest hourly rate group, $10-19. That makes sense for two reasons. First, these writers earn less, so the clients they work for might have lower quality requirements and no access to premium AI detectors. Second, people charging the lowest rates are likely to be non-native English speakers, who’d do a worse job with grammar or style if they wrote the article themselves.

In the highest pay range ($60/hour or more), AI usage was the lowest (but still present).

On average, the detector flags 24% of Upwork writers as using AI. I’d wager the actual number of writers using AI in some capacity is much higher, since it’s not impossible to fool AI detectors with the right prompting or manual tweaking.

Note that this is not a survey of writers. It’s a study that ran the detector on a set of writing samples from Upwork. As a freelance writer myself, I’m sometimes asked by clients to check my copy against this detector. I have to say that it keeps flagging parts of my content as AI even though I wrote it myself (a trait shared by many such AI detectors). The hassle of rewriting parts of my own text aside, Originality is not very accurate, simply because no AI detector can be reliably accurate unless all AI text carries a watermark of some sort. For example, it might rely on a pattern or choice of words to call a piece of text “likely AI-generated” when it was in fact human-written. That being said, Originality AI is exceptionally good at detecting base ChatGPT or Gemini content created with simple prompting or without much tweaking.

This study is Originality AI’s review of articles by Upwork writers in different price ranges. They used their own tool to judge how much of the content was AI-generated. More precisely, they report how many writers had at least some text that is likely AI-generated according to their detector.
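
To make that metric concrete, here is a minimal Python sketch of how a "share of writers with at least some flagged text" figure could be computed per rate bracket. It assumes each sample is a (writer, rate bracket, flagged) record. The records, writer IDs, bracket labels, and the function name `flagged_share_by_bracket` are all hypothetical and invented for illustration; they are not data from the study and not Originality AI's actual method.

```python
from collections import defaultdict

# Hypothetical records: (writer_id, hourly_rate_bracket, detector_flagged_any_part).
# These values are made up for illustration and are NOT from the study.
SAMPLES = [
    ("w1", "$10-19", True),
    ("w1", "$10-19", False),
    ("w2", "$10-19", False),
    ("w3", "$60+", False),
    ("w4", "$60+", True),
    ("w4", "$60+", True),
    ("w5", "$60+", False),
    ("w6", "$60+", False),
]


def flagged_share_by_bracket(samples):
    """Return {bracket: fraction of writers with at least one flagged sample}."""
    writers = defaultdict(set)  # bracket -> every writer seen in that bracket
    flagged = defaultdict(set)  # bracket -> writers with >= 1 flagged sample
    for writer_id, bracket, is_flagged in samples:
        writers[bracket].add(writer_id)
        if is_flagged:
            flagged[bracket].add(writer_id)
    # Divide flagged writers by all writers, bracket by bracket.
    return {b: len(flagged[b]) / len(ws) for b, ws in writers.items()}


if __name__ == "__main__":
    for bracket, share in flagged_share_by_bracket(SAMPLES).items():
        print(f"{bracket}: {share:.0%} of writers flagged")
```

Because the sketch counts writers rather than samples, a single flagged piece is enough to count a writer as "using AI". Under that assumption, one false positive on a human-written article raises the bracket's share just as much as a genuinely AI-written one.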