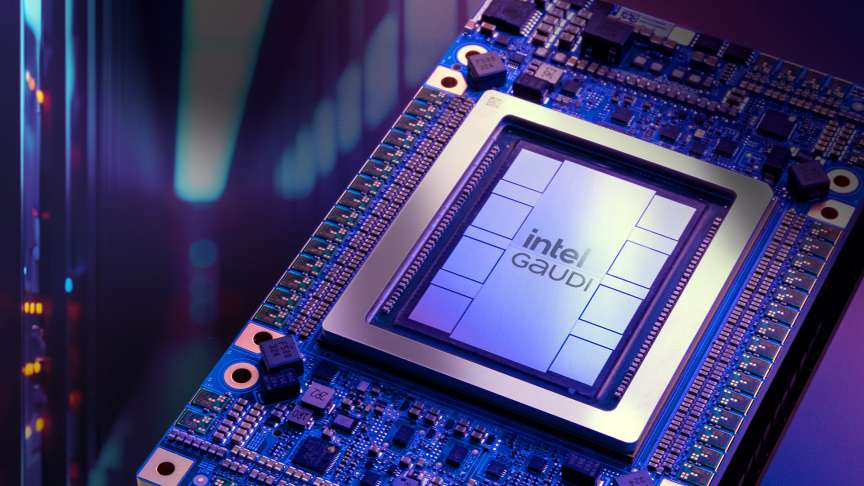

Intel’s Vision 2024 announcement included details on its new chip, Gaudi 3. The chip is reported to perform 50% better at AI inferencing while consuming 40% less power and costing a fraction of the price of the Nvidia H100, the current go-to AI chip for most companies.

As competition ramps up, Intel has legitimately entered the fray. In the words of its own announcement, “Intel goes all-in” on enterprise-grade AI applications. As part of its comprehensive AI strategy, it introduced Gaudi 3, its next-generation AI accelerator chip. On average, it is reported to offer 50% better inferencing than the Nvidia H100 while being cheaper and less power-hungry.

Dell, HPE, Lenovo, and Supermicro (a maker of servers and data center hardware) are the OEMs that Intel has partnered with so far to roll out the new chip. Customers include Airtel, Bosch, and IBM.

Intel Vision 2024 was packed with information on the company’s plan to tackle the demand for AI applications head-on. The news release published on Intel’s website introduces Gaudi 3 as a major improvement over Gaudi 2. The training comparison against the Nvidia H100 was run on Llama 2 with 7B and 13B parameters as well as GPT-3 175B.

AI inferencing is the process of running an already-trained LLM like Llama or GPT to generate output, as opposed to training, which is how the model is built in the first place. Intel claims gains on both fronts, and a 50% faster time-to-train on average suggests there’s a new king in town. At the same time, nothing less could be expected given the investment being poured into this fast-evolving segment by the world’s largest chipmaker (even though AMD has been gaining on it, Intel still holds the lion’s share, 69%, of PC and server CPUs).

Notably, Nvidia is long past the H100’s achievements. It is developing its own next-generation inferencing chips that also offer monumental gains over the H100, which feels like yesterday’s news now. That said, the H100 is still the go-to chip for the majority of companies training LLMs, and it remains the staple of many data centers used to train and run AI models.