Google has published new material on improvements to its ML-powered everyday cleaning robots.

In a detailed update a little over a week ago, Google published its research into ML-enabled cleaning robots. Repetitive tasks such as folding clothes and wiping surfaces are ideal for robots, according to the article on Google’s AI blog. But these tasks also present challenges because home and office environments vary so much.

Google is working on robots that can do these tasks more easily, but there are obstacles, both literal and figurative. Navigating physical obstacles, avoiding spills, and coping with stochastic dynamics are a few of these challenges.

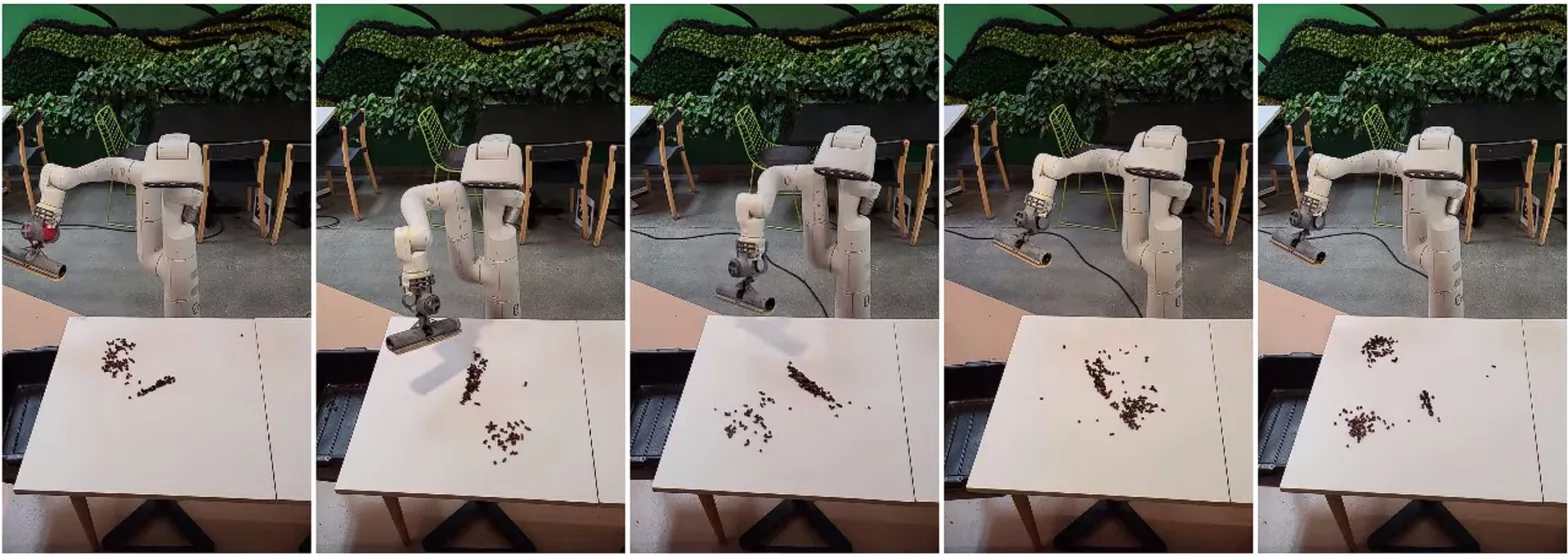

The authors illustrate the problem and its potential solution, or at least the direction of one, with detailed analyses and videos. The new approach uses stochastic differential equation (SDE) simulations to generate large amounts of data, allowing the robots to train wiping policies (in real time) more efficiently. Remarkably, the resulting policies outperformed the baselines.
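To make the idea of generating training data from SDE simulations more concrete, here is a minimal sketch using the standard Euler-Maruyama scheme. It is not Google's model: the drift and diffusion functions, the crumb count, the step size, and all function names (`simulate_sde`, `crumb_drift`, `crumb_diffusion`, `generate_dataset`) are hypothetical and chosen only for illustration.

```python
import numpy as np


def simulate_sde(x0, drift, diffusion, dt, n_steps, rng):
    """Euler-Maruyama integration of dX = drift(X) dt + diffusion(X) dW."""
    x = np.asarray(x0, dtype=float)
    path = np.empty((n_steps + 1,) + x.shape)
    path[0] = x
    for k in range(n_steps):
        # Brownian increment: zero mean, variance dt
        dw = rng.normal(0.0, np.sqrt(dt), size=x.shape)
        x = x + drift(x) * dt + diffusion(x) * dw
        path[k + 1] = x
    return path


def crumb_drift(x):
    # Hypothetical drift: particles are pulled toward the origin.
    return -0.5 * x


def crumb_diffusion(x):
    # Hypothetical constant noise level for every particle.
    return 0.1 * np.ones_like(x)


def generate_dataset(n_rollouts, seed=0):
    """Produce many randomized rollouts that a policy could be trained on."""
    rng = np.random.default_rng(seed)
    rollouts = []
    for _ in range(n_rollouts):
        # 20 particles with random 2D starting positions (assumed layout)
        x0 = rng.uniform(-1.0, 1.0, size=(20, 2))
        rollouts.append(
            simulate_sde(x0, crumb_drift, crumb_diffusion, 0.01, 100, rng)
        )
    return np.stack(rollouts)
```

Because each rollout costs only a few array operations, a loop like `generate_dataset` can produce thousands of randomized scenarios cheaply, which is the general appeal of simulation-generated data.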

The approach has been validated thoroughly on hardware and in simulations.

Notably, the research paper cited by Google was published back on October 19, 2022. But research into these robots did not begin there. Google has been working on such robots for several years now. Almost a year before that, the Chief Robot Officer of X (one of Google parent Alphabet’s innovation labs, formerly known as Google X) blogged about how his team was working toward a world where robots are an integral part of our everyday lives, essentially describing the same machine that Google is talking about now.

Google currently uses reinforcement learning (RL) to enable robots to plan over high-dimensional observation spaces, with the help of cameras, and to optimize trajectories.

The article proposes a novel workflow: sense the environment, plan high-level wiping waypoints with RL, compute trajectories with control methods, and finally execute the planned trajectories using a low-level controller.
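The four stages of that workflow can be sketched as a small pipeline. This is only an illustration of the structure, not the system described in the article: a greedy nearest-neighbour rule stands in for the learned RL policy, linear interpolation stands in for the control-based trajectory computation, and the table is an assumed grid in which nonzero cells are dirty. All function names are hypothetical.

```python
import numpy as np


def sense(table):
    """Sensing: return (row, col) coordinates of dirty cells in a grid."""
    return np.argwhere(table > 0).astype(float)


def plan_waypoints(dirty_cells, start):
    """High-level planning. A greedy nearest-neighbour rule is used here
    as a placeholder for a learned RL policy."""
    remaining = list(dirty_cells)
    waypoints = []
    current = np.asarray(start, dtype=float)
    while remaining:
        dists = [np.linalg.norm(c - current) for c in remaining]
        current = remaining.pop(int(np.argmin(dists)))
        waypoints.append(current)
    return np.array(waypoints).reshape(-1, 2)


def compute_trajectory(start, waypoints, points_per_segment=5):
    """Trajectory computation. Linear interpolation is used here as a
    placeholder for a real trajectory optimizer."""
    traj = [np.asarray(start, dtype=float)]
    for wp in waypoints:
        prev = traj[-1]
        for s in np.linspace(0.0, 1.0, points_per_segment + 1)[1:]:
            traj.append(prev + s * (wp - prev))
    return np.array(traj)


def execute(table, trajectory):
    """Low-level execution: wipe every grid cell the trajectory passes."""
    cleaned = table.copy()
    for point in trajectory:
        row, col = np.rint(point).astype(int)
        cleaned[row, col] = 0
    return cleaned


def wipe_table(table, start=(0, 0)):
    """Run the full sense -> plan -> compute -> execute pipeline."""
    waypoints = plan_waypoints(sense(table), start)
    trajectory = compute_trajectory(start, waypoints)
    return execute(table, trajectory)
```

Keeping the stages separate means the high-level planner only has to output a handful of waypoints, while the lower layers handle how the arm actually moves between them.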

The more advanced approach can do a lot more than basic robots can. For example, it can distinguish between dry objects and absorbed liquids while simultaneously capturing multiple isolated spills. Because it models the uncertainty in how spills and crumbs behave, the robots work faster, and the simulations report times in milliseconds.

This is certainly a major breakthrough, but not an entirely unexpected one given the significant robotics research under way at Google’s labs.

The prototype wipes tables using a squeegee and can learn to grasp cups and open doors.

Google is also reportedly shutting down Everyday Robots, another lab that was spun out of X to build, well, everyday robots. In a recent story, Wired reported that Alphabet is shuttering the company and consolidating its personnel and equipment within Google Research as part of its cost-cutting layoffs.