529 participants could detect deepfake speech 73% of the time in English and Mandarin.

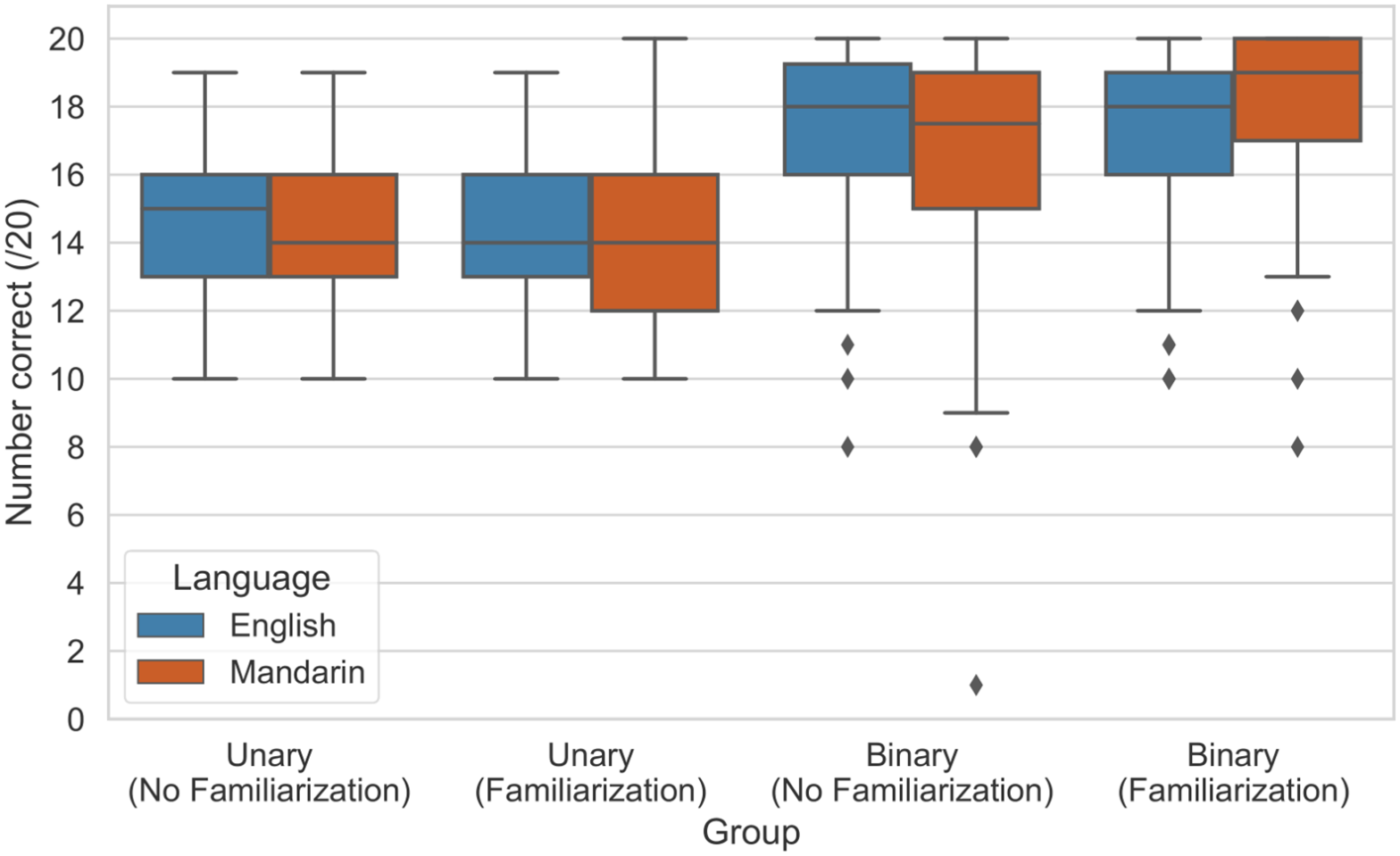

In a new University College London study, researchers found that humans can detect deepfake speech 73% of the time in English and Mandarin. The researchers used a text-to-speech algorithm to generate 50 deepfake speech samples in each language and played them to 529 participants.

The other 27% of the time, people could not tell a deepfake from a real voice. Most notably, efforts to improve that figure yielded little to no gain. For example, listening to shorter clips or listening to the clips several times made no difference, while briefing participants on how to recognize deepfake voices improved the score by only 3.84%.

This is alarming because it foretells a future where realistic deepfake voices can be used to sway public opinion and cause harm. It also gives phone scammers a new way to sound more convincing.

The study is titled “Warning: Humans cannot reliably detect speech deepfakes” (PLOS ONE | PubMed | arXiv).

Humans can detect speech deepfakes, but not consistently. They tend to rely on naturalness to identify deepfakes regardless of language. As speech synthesis algorithms improve and become more natural, it will become more difficult for humans to catch speech deepfakes.

Kimberly T. Mai, Sergi D. Bray, Toby Davies, Lewis D. Griffin—Peer-reviewed research paper, Public Library of Science

Additional findings:

- Some people used pauses, tone, and other features to classify utterances more accurately.

- Humans generally classify based on “naturalness” and “robotic” qualities.

- The crowd performance was on par with the performance of automated deepfake detectors and was more reliable.