A new multimodal AI model can match GPT-4’s performance while being free to train and use.

Four researchers from the University of Wisconsin-Madison, Microsoft Corporation, and Columbia University have created a new multimodal model that has both, visual and language capabilities, and released it under an open-source license, free to be used by anyone.

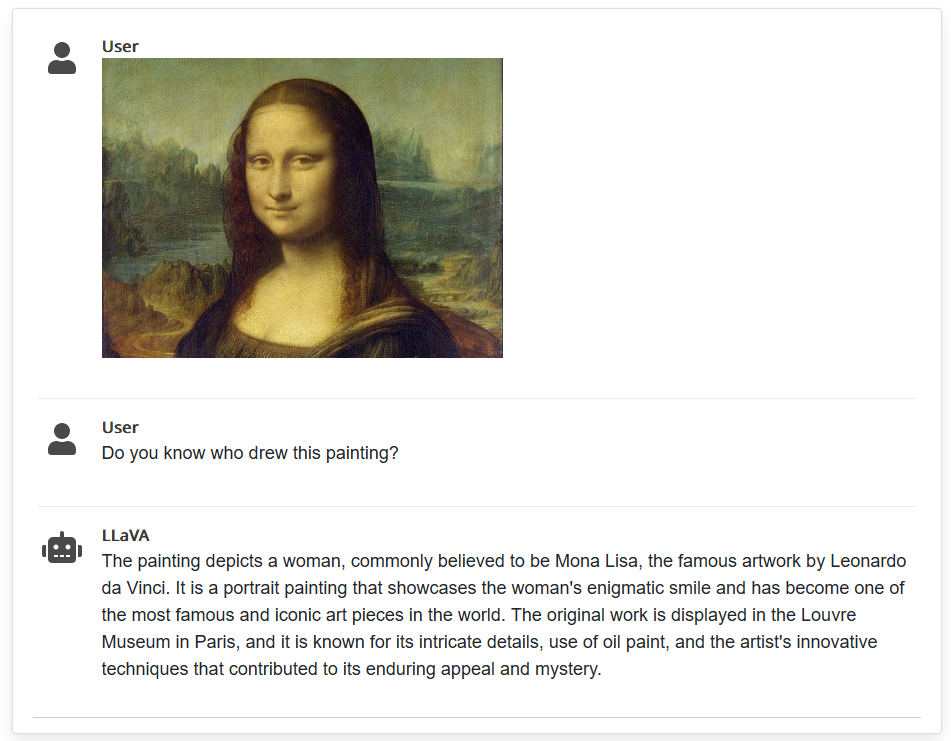

Users can try the LLaVA model’s image and language processing capabilities on a demo page.

The model, called LLaVA (Large Language and Vision Assistant) has comparable performance to the industry’s leading multimodal model, GPT-4 by OpenAI, which is currently used in ChatGPT Plus and Enterprise.

LLaVA demonstrates impressive multimodel chat abilities, sometimes exhibiting the behaviors of multimodal GPT-4 on unseen images/instructions, and yields a 85.1% relative score compared with GPT-4 on a synthetic multimodal instruction-following dataset. When fine-tuned on Science QA, the synergy of LLaVA and GPT-4 achieves a new state-of-the-art accuracy of 92.53%.

Visual Instruction Tuning (arXiv link), paper in Computer Vision and Pattern Recognition

The researchers, Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee, have specializations in fields including computer vision, machine learning, natural language processing, generative AI, deep learning, and languages. Outlining their process and goals, they published the research paper called Visual Instruction Tuning, introducing the LLaVA end-to-end large multimodal model connecting a vision encoder and an LLM for “general-purpose vision and language understanding.”

The official GitHub repository has all the information for configuration and training. All weights including the Conversation 58k, Detail 23k, and Complex Reasoning 77k, are freely available on Hugging Face as well.

The official webpage for the LLaVA model clarifies how the model uses CLIP VViT-L/14, a visual encoder, and Vicuna, a large language model, in order to achieve its multimodal capabilities. It’s essentially a connection of two different models—One for image processing and another for natural language processing. First, the model was trained to improve alignment and then fine-tuned for specific use cases such as visual chat and science QA. In theory, the model’s dataset can be tweaked and it can be trained for any purpose with near-GPT-4 accuracy.

Keeping GPT-4 as the judge, LLaVA attains as much as 92.53% accuracy. The model also has OCR reading capabilities.

Setting it up is as easy as cloning the GitHub repository, setting up a Python environment, downloading the pre-trained model weights (or training your own), and launching a controller or web server. For training, users can follow the same route as used by the researchers for the pre-trained weights—Feature alignment and visual instruction tuning.