OpenAI is weighing other options, including making its own chips for AI inference and training.

ChatGPT’s parent company, OpenAI, is looking into designing its own AI chips. The company has gone as far as evaluating a potential acquisition target but has not yet decided to proceed. It has reportedly been discussing several options since last year to deal with the shortage of AI chips. Nvidia is currently the dominant supplier of the chips that AI companies need for training and other workloads, which has driven up prices and left supply well short of demand.

Reuters first reported the development in an exclusive, citing people familiar with the company’s plans and internal discussions that were described to the outlet.

The options OpenAI is reportedly considering include working with other chipmakers, such as Nvidia competitor AMD, which is also ramping up its AI chipmaking capabilities and is reportedly partnering with Microsoft on custom chip design.

The news comes amid a global shortage of AI chips, with new AI startups springing up by the dozen every week, although most of them do not train their own models. Training the language models behind generative AI tools, like other machine learning workloads, requires specialized hardware similar to the GPUs used in gaming (and, for a while, in cryptocurrency mining). Unsurprisingly, demand for AI chips and for the company that makes most of them has skyrocketed, pushing Nvidia to the coveted $1 trillion market cap roughly four months ago.

OpenAI CEO Sam Altman has previously hinted at his frustration with the lack of AI chips as the company tries to scale. He has also complained about the GPU shortage in an interview in which he was “remarkably open.” OpenAI has since taken down the interview, but an archived copy is hosted on a Vercel-based website, and a June 1 capture of that page is available on the Wayback Machine in case the copy is edited.

For now, OpenAI does its heavy lifting on a Microsoft supercomputer that uses 10,000 Nvidia GPUs.

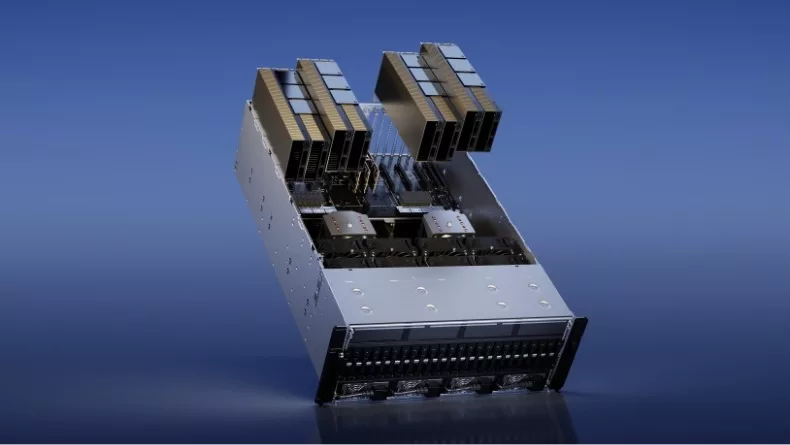

Pictured: Nvidia’s H100 “AI chip,” a GPU that has powered training and inference for the language models behind AI tools such as OpenAI’s ChatGPT and Google’s Bard, as well as those of countless other big companies and startups. Nvidia plans to make AI inference more cost-effective with its new chip, but renting or buying hardware capable of this work remains expensive.