Lumiere is a new offering from Google Research that converts text or images into video clips that look both striking and realistic.

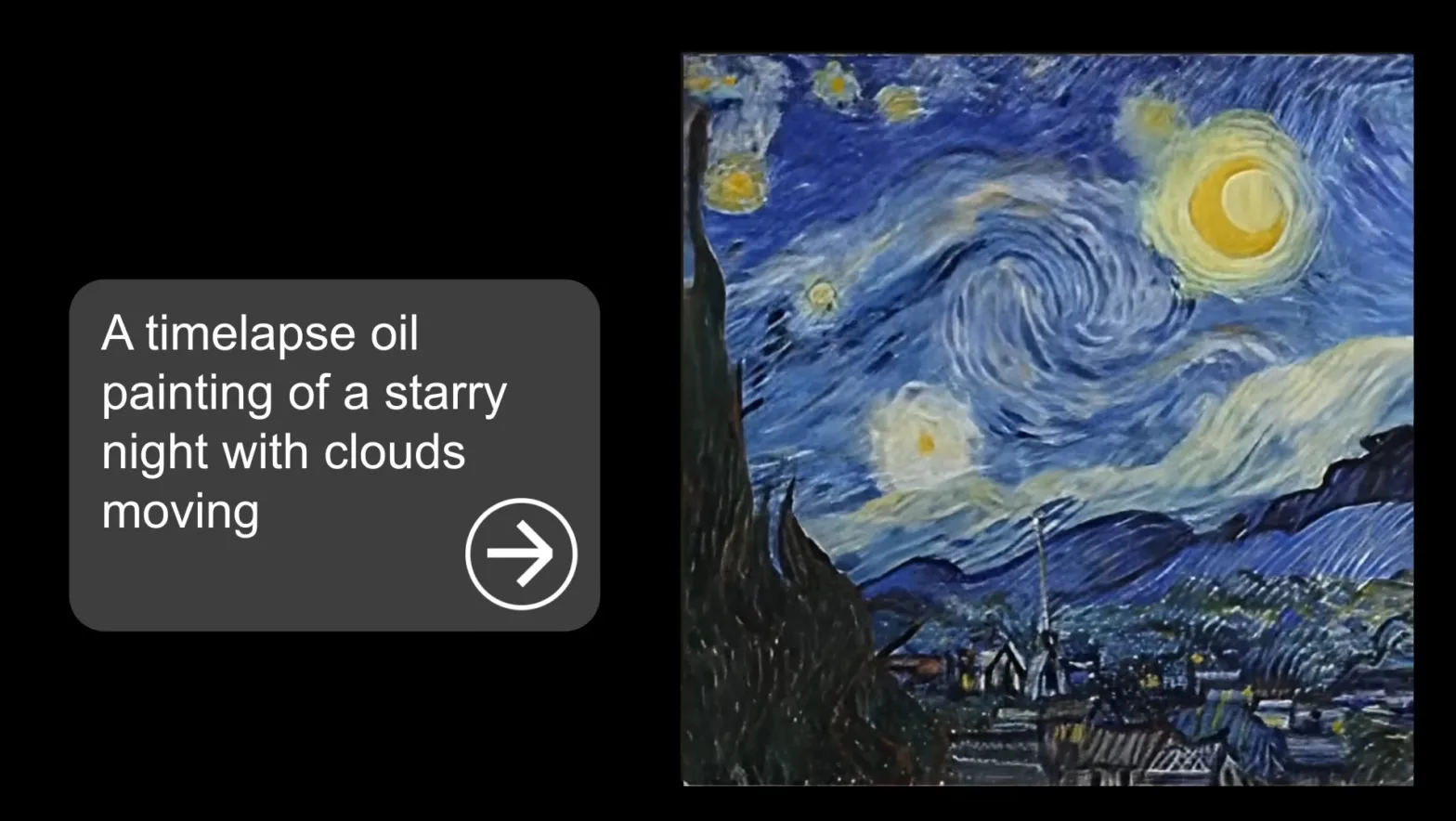

With robust text-to-video, image-to-video, reference-based stylization, selective animation, and video editing capabilities (such as selecting a dress in a video and changing it to something else), Google Research has teased a powerful-looking tool dubbed Lumiere.

The project page on GitHub details its various capabilities, and the research paper outlines how the model was built and what it is useful for. An accompanying video showcases the tool in action.

Lumiere is a space-time diffusion model for video generation. It synthesizes videos with realistic motion that doesn’t look awkward, robotic, or overly fake. Notably, the model generates the entire temporal duration of a clip at once, in a single pass. Other models typically synthesize distant keyframes first and then fill in the frames between them in further passes, which makes globally consistent motion harder to achieve.

This is made possible by an architecture that down-samples and up-samples the video in both space and time, built on top of a pre-trained text-to-image diffusion model. The model also supports video inpainting: you can select a region of a video and have it edited consistently across every frame, for the entire length of the clip, much like what Stable Diffusion does for images.
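To make "down-sampling and up-sampling in space and time" concrete, here is a minimal NumPy sketch. It is not Lumiere's code, which relies on learned layers inside a diffusion network; the function names, the fixed average pooling, and the nearest-neighbour up-sampling are all assumptions chosen purely for illustration. The point is only that the clip is shrunk along the frame axis as well as along height and width, so the model can work on a compact representation of the whole video at once.

```python
import numpy as np


def downsample_space_time(video: np.ndarray, t: int = 2, s: int = 2) -> np.ndarray:
    """Average-pool a (frames, height, width, channels) clip.

    Hypothetical helper: pools by a factor `t` along time and `s` along
    each spatial axis. A real model would use learned layers instead.
    """
    frames, height, width, channels = video.shape
    if frames % t or height % s or width % s:
        raise ValueError("clip dimensions must be divisible by the pooling factors")
    # Split every pooled axis into (coarse index, position inside the block).
    blocks = video.reshape(frames // t, t, height // s, s, width // s, s, channels)
    # Average over the three "inside the block" axes.
    return blocks.mean(axis=(1, 3, 5))


def upsample_space_time(video: np.ndarray, t: int = 2, s: int = 2) -> np.ndarray:
    """Nearest-neighbour up-sampling: repeat frames, rows, and columns."""
    return video.repeat(t, axis=0).repeat(s, axis=1).repeat(s, axis=2)


# A random stand-in for a 16-frame, 64x64 RGB clip.
clip = np.random.default_rng(0).random((16, 64, 64, 3))
coarse = downsample_space_time(clip)
restored = upsample_space_time(coarse)

print(clip.shape, coarse.shape, restored.shape)
# (16, 64, 64, 3) (8, 32, 32, 3) (16, 64, 64, 3)
```

With factors of 2, the coarse clip holds one eighth as many values as the original (half the frames, half the height, half the width), which hints at why compressing along time as well as space makes processing a full clip in one pass more tractable.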

The stylized generation aspect is also pretty cool: you specify a reference image, and the model copies its style when creating the video. In other words, you can make a clip of a cat driving a car in a cyberpunk setting, in a hand-drawn art style, or in the style of a famous painter.

Lumière is French for ‘light’.