AMD’s upcoming MI300 chip will compete for a slice of the AI training market while also powering what is expected to be the world’s fastest supercomputer.

The GPUs used to train large language models (LLMs) are made mainly by Nvidia. Companies like Meta, Google, Microsoft, and OpenAI have all trained models on Nvidia’s data center GPUs, whose dedicated AI accelerator cores speed up training. AMD, Nvidia’s competitor, is now eyeing this market with its upcoming Instinct MI300 “superchip,” a data center APU with powerful AI capabilities.

Official page: AMD Instinct Server Accelerators

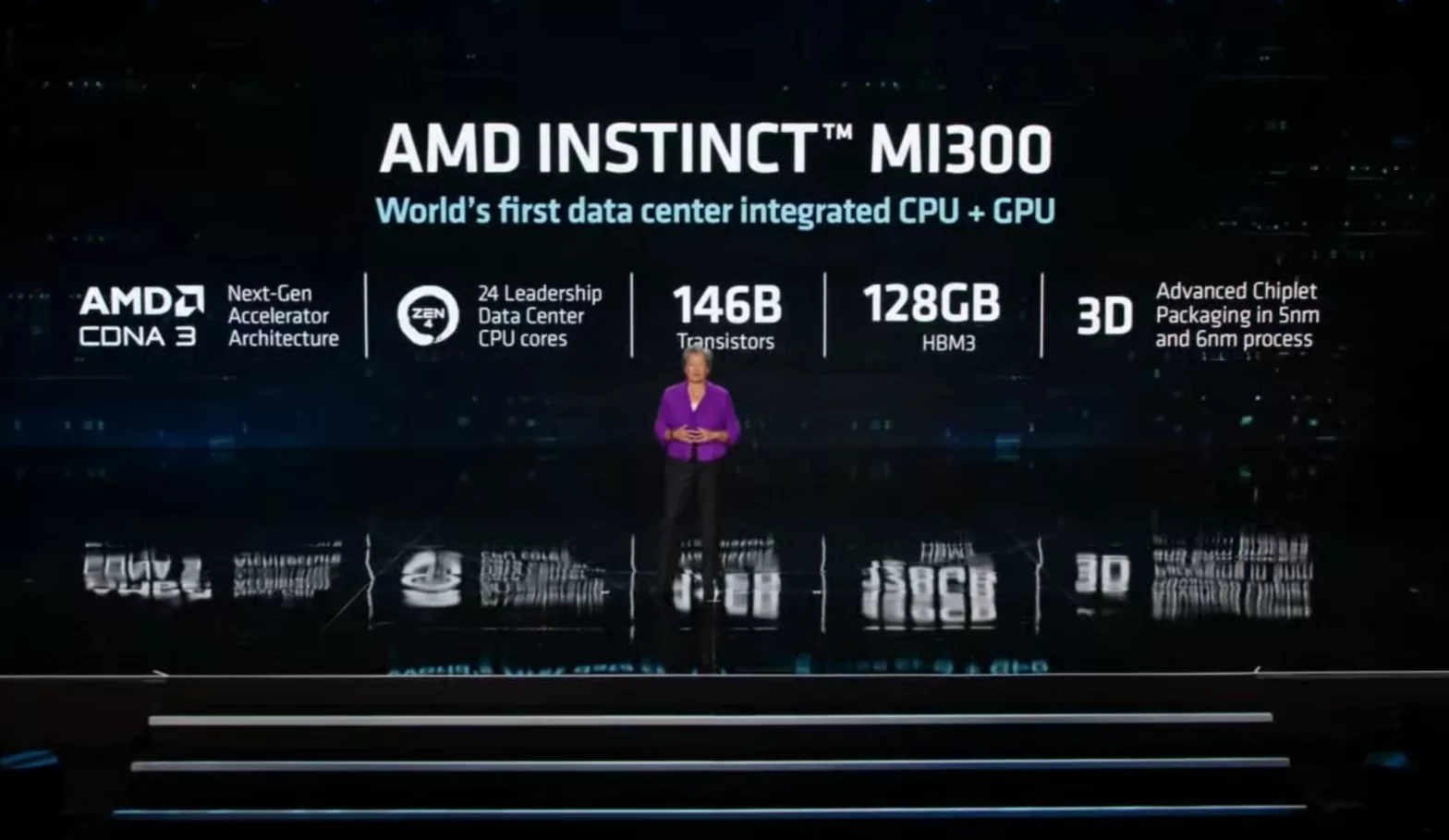

The MI300 packs 146 billion transistors, with CPU, GPU, and memory all combined in a single package rather than on separate chips, which AMD bills as a first for a data center chip. It is set to launch in the second half of 2023. With 24 Zen 4 CPU cores and 13 chiplets in total, it is poised to compete with Nvidia’s Grace Hopper and H100 chips.

As we go into the second half of the year and the launch of MI300, sort of the first user of MI300 will be the supercomputers or El Capitan, but we’re working with some large cloud vendors as well to qualify MI300 in AI workloads. And we should expect that to be more of a meaningful contributor in 2024.

AMD CEO Lisa Su, during the Q4 earnings call

AMD’s data center business has grown 42% year over year. This segment isn’t specific to AI training: its biggest clients are in the financial services, tech, energy, automotive, and aerospace sectors, which rely on high-performance computing. AMD’s EPYC processors are the main driver of growth in this segment.

AMD also powers more than 100 of the world’s fastest supercomputers, with a focus on energy efficiency.

We expect AI adoption will accelerate significantly over the coming years and are incredibly excited about leveraging our broad portfolio of CPUs, GPUs and adaptive accelerators in combination with our software expertise to deliver differentiated solutions that can address the full spectrum of AI needs in training and inference across cloud, edge and client.

Lisa Su

Lawrence Livermore National Laboratory’s upcoming supercomputer, El Capitan, will use AMD’s MI300 superchip and has been billed as 7X faster than the world’s fastest supercomputer. El Capitan will be an exascale system, though not the first: the current fastest supercomputer, Frontier, has already crossed the exascale mark and is also powered by AMD chips.

The Department of Energy is the world leader in supercomputing and El Capitan is a critical addition to our next-generation systems. El Capitan’s advanced capabilities for modeling, simulation and artificial intelligence will help push America’s competitive edge in energy and national security, allow us to ask tougher questions, solve greater challenges and develop better solutions for generations to come.

Then-US Energy Secretary Rick Perry