Inflection AI’s cluster of 22,000 Nvidia H100s is expected to deliver 1.474 exaflops (FP64), second only to Frontier.

Inflection AI, a one-year-old startup, has raised $1.3 billion from investors including Nvidia and Microsoft, valuing the company at $4 billion. The company was founded by Google DeepMind co-founder Mustafa Suleyman and LinkedIn co-founder Reid Hoffman. Suleyman said:

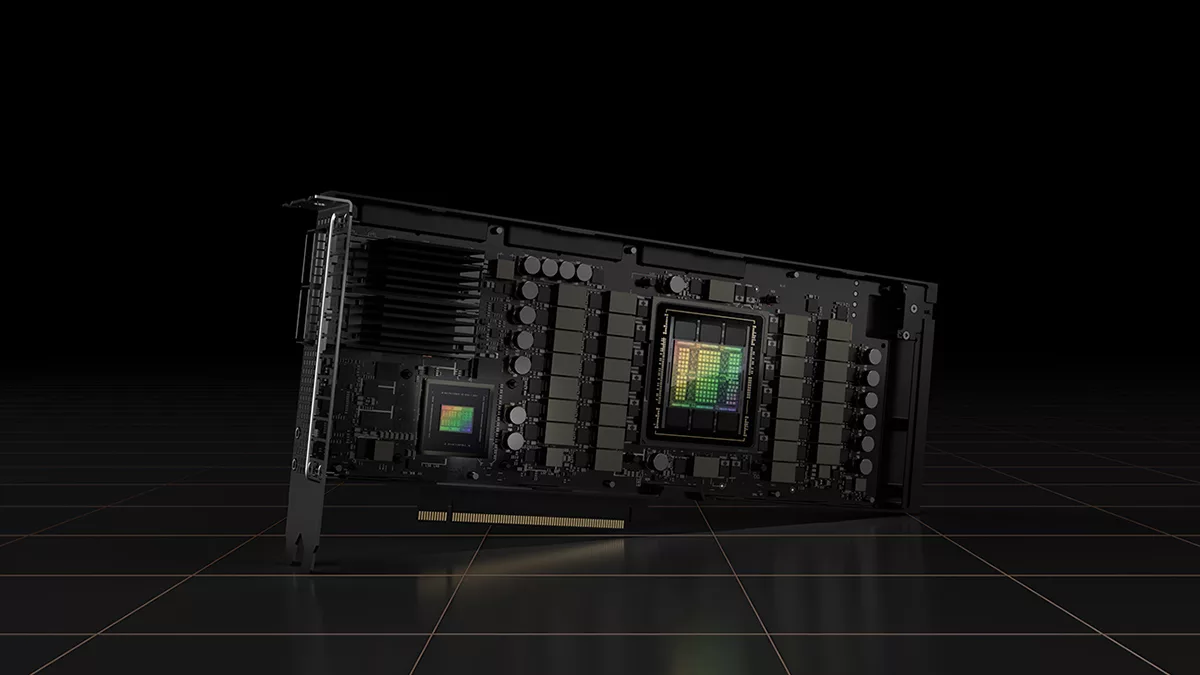

We’ll be building a cluster of around 22,000 H100s. This is approximately three times more compute than what was used to train all of GPT4. Speed and scale are what’s going to really enable us to build a differentiated product.

Mustafa Suleyman, Founder, Inflection AI, speaking at the Collision Conference

With 22,000 Nvidia H100 GPUs, the cluster would rank second only to Frontier among supercomputers. Inflection AI aims to develop personal AI for everyone and is a leading OpenAI competitor.

- 22,000 Nvidia H100 GPUs

- 2,750 Intel Xeon CPUs

- 1.474 exaflops at FP64
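The exaflops figure in the list above can be reproduced with simple multiplication. The sketch below assumes the number is derived from the H100 SXM's peak FP64 Tensor Core rating of 67 teraflops per GPU, which is Nvidia's published spec. The article does not say which rating it used, so treat that constant as an assumption. The result is a theoretical peak, not a measured benchmark score.

```python
# Back-of-the-envelope check of the cluster's headline FP64 figure.
# ASSUMPTION: 67 TFLOPS is Nvidia's published peak FP64 Tensor Core rating
# for the H100 SXM; the article does not state which rating it relied on.
H100_FP64_TENSOR_TFLOPS = 67
GPU_COUNT = 22_000  # from the article


def cluster_peak_exaflops(gpu_count: int, tflops_per_gpu: float) -> float:
    """Return theoretical peak in exaflops (1 exaflop = 1,000,000 teraflops)."""
    return gpu_count * tflops_per_gpu / 1_000_000


peak = cluster_peak_exaflops(GPU_COUNT, H100_FP64_TENSOR_TFLOPS)
print(f"{peak:.3f} exaflops")
```

Sustained performance on a benchmark such as LINPACK would come in below this peak, so the number is best read as an upper bound.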

The company recently released the Pi chatbot, powered by its Inflection-1 model, which currently trails Google’s LaMDA and OpenAI’s ChatGPT. Pi is geared more toward common-sense tasks for personal-assistant applications.

As a single Intel Xeon CPU can drive up to eight Nvidia H100s, the 22,000 GPUs call for 2,750 CPUs. The new supercomputer cluster will have nearly 690 racks of four-node Intel Xeon CPUs and will reportedly use 31 MW of power.
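The CPU and rack counts in the paragraph above follow from plain division, sketched below. It assumes "four-node" means four CPUs per rack, which is one reading of the article and not a confirmed layout. The per-GPU power figure is the reported 31 MW spread evenly across all GPUs, so it also absorbs CPUs, networking, and cooling and is not the draw of a single H100.

```python
import math

GPU_COUNT = 22_000       # from the article
GPUS_PER_CPU = 8         # from the article: one Xeon per up to eight H100s
CPUS_PER_RACK = 4        # ASSUMPTION: "four-node" read as four CPUs per rack
CLUSTER_POWER_MW = 31    # from the article (reported figure)

# CPUs needed if every Xeon drives its maximum of eight GPUs.
cpus = math.ceil(GPU_COUNT / GPUS_PER_CPU)

# Racks implied by that CPU count; the article rounds this to "nearly 690".
racks = cpus / CPUS_PER_RACK

# Whole-cluster power averaged per GPU (includes non-GPU overhead).
watts_per_gpu = CLUSTER_POWER_MW * 1_000_000 / GPU_COUNT

print(f"CPUs: {cpus}")
print(f"Racks: {racks}")
print(f"Average power per GPU slot: {watts_per_gpu:.0f} W")
```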

Nvidia’s H100 GPUs are extremely hard to acquire, and most companies instead rely on Nvidia’s cloud data centers to train their language models. Procuring 22,000 of them during today’s AI boom is a currently unparalleled feat, made possible largely by Nvidia’s investment in the development of the new cluster.

Inflection-1 is expected to improve markedly with the help of this new cluster and to close the gap with both ChatGPT and Bard, especially in coding.

The cluster is currently poised to rank #2, but the El Capitan and Aurora supercomputers will be faster, which would make it #4 instead.
