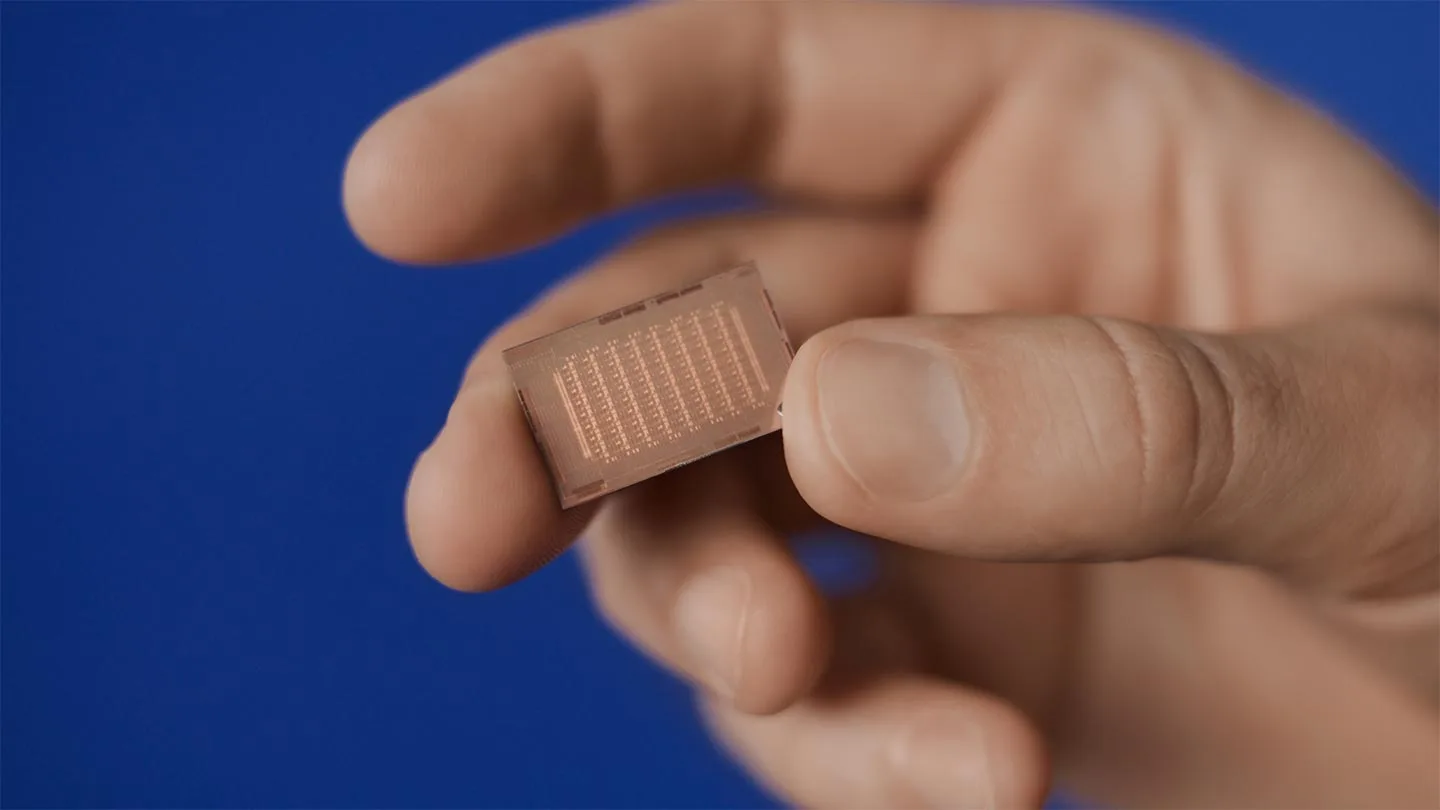

MTIA v2 is Meta’s latest AI inference accelerator chip. Meta says it delivers about 3x the performance of the v1 while being 1.5x more power-efficient.

As the chip wars rage on, with Intel having recently announced its Gaudi 3, Meta is jumping into the fray with its next-generation AI inference chip (one that runs trained models rather than training them), which is far superior to the previous one. Meta is investing in custom silicon and its own chip design so that it doesn’t have to rely on companies like Intel and Nvidia. It’s not directly competing with these companies (at least not yet), because the MTIA family of chips is meant for internal use only.

As such, it’s a long-term investment in the company’s own infrastructure. For now, the accelerators serve the ranking and recommendation models behind Facebook and Instagram advertising rather than generative models like Llama.

Notably, Meta is just getting started. The announcement reads, “We currently have several programs underway aimed at expanding the scope of MTIA, including support for GenAI workloads.” Will we be able to ask Facebook itself to generate a reply to a chat message from a friend we don’t really care about? Well, it’s more likely that Meta will prioritize use cases such as creating ad copy variations in the Ad Manager or generating captions for your posts within the Meta Business Suite.

MTIA v2 (for some reason that’s not what Meta is calling it, even though it calls the first one MTIA v1) is built on TSMC’s 5nm process node, the same process technology behind some of AMD’s RDNA 3 GPUs, its Zen 4 CPUs, and Apple’s A14 chip (iPhone 12).

Though the MTIA v2 was originally meant to improve the search, recommendation, and ranking features behind many of Meta’s internal algorithms, there are hints that it will eventually power some sort of generative AI model that might compete with tools like ChatGPT and Gemini. The v2 was codenamed Artemis internally, and it is just one example of big tech companies designing their own custom chips instead of relying on companies like Nvidia and Intel (cases in point: Google’s TPUs, Microsoft’s Maia 100, and Amazon’s Trainium).