Mistral AI dropped the magnet link to download its latest and greatest model.

Mixtral 8x22B is Mistral’s newest LLM. The torrent magnet link to download the 281.24 GB model was dropped in a tweet a few days ago.

Notably, it ships only as a base (autocomplete) model, without an instruction-tuned version, so you can’t directly compare it to the models behind chatbots. People everywhere from Hugging Face to Reddit are already asking for an instruct version.

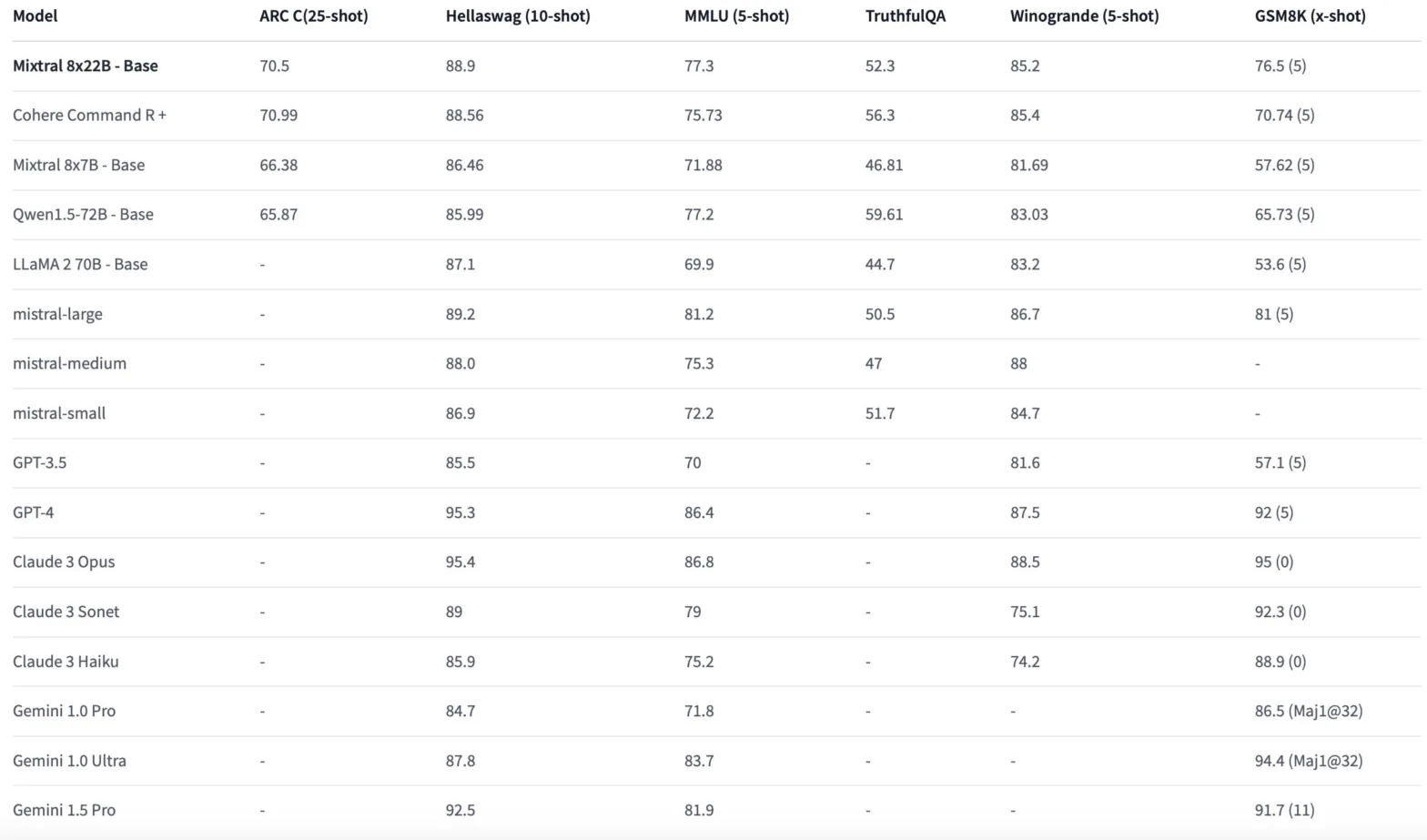

Mistral is one of the biggest names in the world of open-source LLMs, though Cohere’s Command R+ has recently taken the top position, going toe-to-toe with GPT-4. That said, Mixtral 8x22B beats Command R+ in certain areas, such as creative writing and text-generation speed. This back-and-forth is nothing new; the two open-source LLM families have traded wins like this before.

The new model has a 65k-token context window and a reported 176B parameters. Its release closely followed several others, most notably Google’s Gemini 1.5 Pro and OpenAI’s GPT-4 Turbo with Vision.