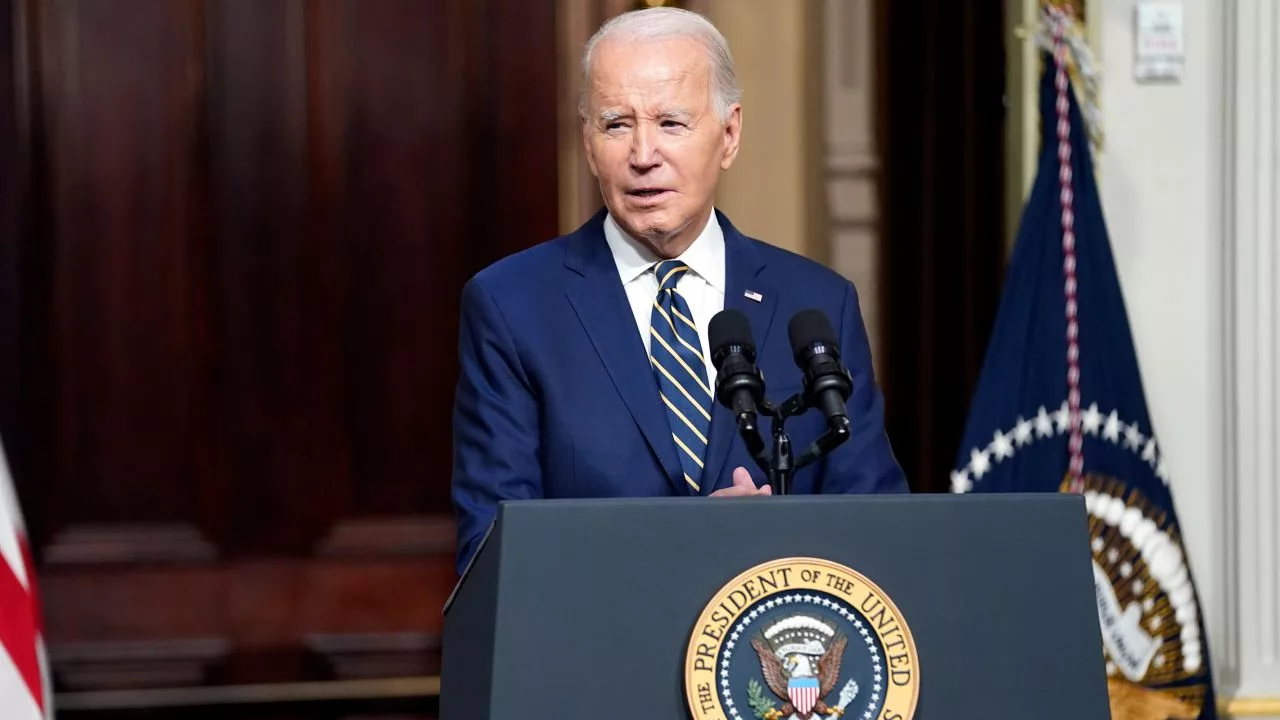

Joe Biden gets seven of the biggest tech companies to commit to internal and external safety standards in their pursuit of better AI models and tools.

In a considerable boost to AI safety efforts, big tech companies including OpenAI, Google, Meta, Microsoft, Inflection AI, Anthropic, and Amazon committed to enacting voluntary safeguards and standards during a White House meeting.

US President Joe Biden himself has pressed for guardrails to keep the meteoric rise of generative AI in check and limit its risks.

We must be clear-eyed and vigilant about threats emerging from emerging technologies that can pose — don’t have to but can pose — to our democracy and our values. This is a serious responsibility; we have to get it right. And there’s enormous, enormous potential upside as well.

Joe Biden, US President

The fact sheet is available on the official White House website.

According to the release, the commitments take effect immediately and include:

- Internal and external security testing of AI systems before release.

- Sharing information with other industry partners as well as the government, civil society, and academia to manage risks. This includes technical collaboration.

- Investing in cybersecurity and insider threat safeguards to protect models, including proprietary generative models and other unreleased model weights.

- Facilitating third-party discovery and reporting of vulnerabilities in the AI systems.

- Developing robust technical mechanisms, such as a watermarking system, to let users know when content is AI-generated.

- Publicly reporting the capabilities, limitations, and areas of appropriate and inappropriate use of the AI systems being developed and released.

- Prioritizing research on the societal risks that AI systems can pose, including bias, disinformation, and privacy infringement.

- Developing AI systems to help address societal challenges such as cancer prevention and climate change.

It remains to be seen how these standards will be upheld. Any breach can certainly be grounds for legal action, but reporting all advancements transparently is up to each company individually. They can choose, for example, to keep certain tools or models private, since the seven companies are more or less competitors or have some overlap in users or tools.

The US government is also moving toward greater international collaboration on AI safety. President Biden met with AI experts and researchers in San Francisco last month.